A modeler's manifesto

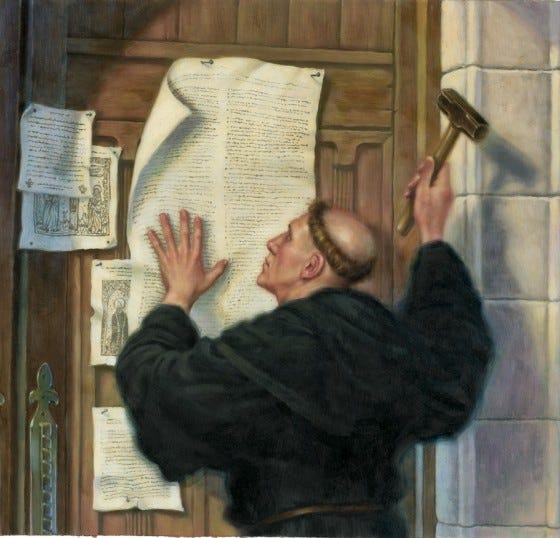

If Martin Luther were a modeler, he'd have written this.

I present a draft of a modeling manifesto. When I started, I was hoping to nail this Martin Luther-style to the door of the Church of Deep Learning.

But I expect this list will grow and evolve because, you know, it’s the Internet. For now, though, it’s enough to draw a line in the sand.

I want you to be on my side of the line.

On the modeling vocation

Modelers are makers. Some people like to make things with CNC devices and 3D printers in sheds. Some people make crafts and sell them on Etsy. Modelers make quantitative models of real-world phenomena.

The satisfaction of having made something good is sufficient motivation to model.

A modeler should meet the prestige of employment and compensation at famous companies with stoic equanimity. But stoicism ≠ asceticism; negotiate hard, don’t leave money on the table.

When possible, tie your model’s outcomes to bets; skin-in-the-game makes you a better modeler.

(For those in school.) If the teacher is a great teacher, take the class regardless of the subject.

(For those in school.) You’ll do most of your learning online after you finish your program and are out in the field. So take the class if the subject matter looks useful but too hard to learn without the fear of a bad grade as motivation. These subjects usually cover fundamental ideas that pay dividends throughout your career.

Modeling vs inference; or, where a modeler should spend her time

Models are distinct from inference.

Since modeling is distinct from inference, the design of modeling libraries should treat them as separate concerns (this is rarely the case).
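To make the separation concrete, here is a minimal sketch in plain Python. The model is just a log-joint density; two interchangeable inference routines consume it without knowing anything about coins. The names (`log_joint`, `posterior_mean_grid`, `posterior_mean_metropolis`), the Beta(2, 2) prior, and the toy data are all my assumptions for illustration, not the API of any real library.

```python
import math
import random

def log_joint(theta, data):
    """The MODEL: Beta(2, 2) prior on a coin's bias, Bernoulli likelihood.
    Returns the unnormalized log posterior density at theta."""
    if not 0.0 < theta < 1.0:
        return -math.inf
    heads = sum(data)
    tails = len(data) - heads
    log_prior = math.log(theta) + math.log(1.0 - theta)  # Beta(2, 2) up to a constant
    return log_prior + heads * math.log(theta) + tails * math.log(1.0 - theta)

def posterior_mean_grid(log_density, data, n=2001):
    """INFERENCE A: deterministic grid (midpoint) approximation on (0, 1)."""
    grid = [(i + 0.5) / n for i in range(n)]
    weights = [math.exp(log_density(t, data)) for t in grid]
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)

def posterior_mean_metropolis(log_density, data, steps=20000, seed=0):
    """INFERENCE B: random-walk Metropolis, discarding the first quarter as burn-in."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_density(theta, data)
    total, kept = 0.0, 0
    for i in range(steps):
        proposal = theta + rng.gauss(0.0, 0.1)
        candidate = log_density(proposal, data)
        # Accept with probability min(1, exp(candidate - current)).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= steps // 4:
            total += theta
            kept += 1
    return total / kept

data = [1, 1, 0, 1, 1, 0, 1, 1, 1, 0]  # toy data: 7 heads, 3 tails
grid_mean = posterior_mean_grid(log_joint, data)
mcmc_mean = posterior_mean_metropolis(log_joint, data)
```

Swapping the prior or likelihood touches only `log_joint`; swapping the algorithm touches only the inference function. For this toy model the exact posterior is Beta(9, 5), so both estimates should land near 9/14.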

Favor spending time learning more about the domain you are modeling. It makes modeling more fulfilling, and big tech can’t automate away domain expertise.

Hyperparameter-tuning (changes to a learning algorithm’s configuration where improvements on the results can only be explained retroactively) is the scutwork of inference. Don’t waste time up-leveling this skill. Employers may pay you money to do this now, but big tech’s auto-ML methods are going to automate that skillset into gig work.

Spend time up-leveling inference skills where one uses domain knowledge and modeling assumptions (e.g., conditional independence) to constrain the inference algorithm.

Use computational Bayesian inference and amortize it with deep learning.

The Folk Theorem of Statistical Computing is usually true; when inference doesn’t work, the issue is probably your model and not the inference algorithm. That said, a good model shouldn’t have to compromise its quality for the sake of the inference algorithm.

On explainability, prediction, and truth

Explainability without prediction skews toward pseudoscience. Prediction without explainability skews toward superstition.

Just because math was involved doesn’t make it true.

Just because accuracy is high doesn’t make it true. Just ask Bertrand Russell’s dead chicken.

Social scientists, envious of natural scientists, pretend there is a “ground truth” model and try to find it. Instead, they should seek to write model software that works at least as well as the informal models that live in their wetware. Once separated from ego and cognitive bias, those models might become useful.

Humans suck at probability but have strong intuitions about causality. Statistical learning algorithms are the opposite. Thus, probabilistic models should constrain probability with causal explanations.

Combining explanatory hierarchical models with deep learning and computational Bayesian inference eliminates the trade-off between explanation (goodness-of-fit) and prediction (out-of-sample accuracy) while still constraining complexity.

It is better to have assumptions explicit in the model rather than in the labeling of the data or the training procedure. Sometimes you need to do the latter, but often people do it only because it helps hide hard-to-defend assumptions.

It is better to constrain complexity with structure from the problem domain rather than arbitrary penalties, regularizers, and training hacks. “Number of parameters times log N” and “dropout” are weird ways to conceptualize Occam’s razor.

On randomness, probability, and decision-making under uncertainty

Model not the data, but the process that generates the data.

The process that generates your data is probably deterministic unless you are modeling quantum phenomena or chaotic behavior (most likely, you aren’t).

Thus, the reason to have random elements in your model is that your limited view into the data-generating process creates uncertainty. If you were Laplace’s all-knowing demon, you wouldn’t need probability.
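A toy sketch of that point, under assumptions of my own: the `process` function below is fully deterministic, but the observer only sees one of its two inputs. The thresholds, the ranges, and the uniform spread over the hidden input are made up for illustration.

```python
from collections import defaultdict

def process(visible, hidden):
    """A fully deterministic data-generating process (hypothetical)."""
    return 1 if visible + hidden >= 6 else 0

def apparent_probability(visible_values, hidden_values):
    """What an observer who cannot see `hidden` would record:
    the frequency of outcome 1 for each visible input,
    assuming every hidden value is equally likely."""
    outcomes = defaultdict(list)
    for v in visible_values:
        for h in hidden_values:
            outcomes[v].append(process(v, h))
    return {v: sum(o) / len(o) for v, o in outcomes.items()}

probs = apparent_probability(range(3), range(10))
```

Nothing in `process` is random, yet the observer's best description of it is a table of probabilities, one per visible input. The randomness lives in the observer's ignorance of `hidden`, not in the process.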

It is better to combine uncertainty with the stakes of model-based decisions using Bayesian decision theory.
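As a minimal sketch of what that looks like: pick the action that minimizes expected loss under the posterior, rather than acting on the most probable state. The maintenance scenario, the posterior probabilities, and the costs below are invented for illustration.

```python
def expected_loss(action, posterior, loss):
    """Average the loss of `action` over the posterior on states."""
    return sum(p * loss(action, state) for state, p in posterior.items())

def bayes_action(actions, posterior, loss):
    """The Bayes decision: the action with the smallest expected loss."""
    return min(actions, key=lambda a: expected_loss(a, posterior, loss))

# Hypothetical posterior over a machine's state, and hypothetical costs.
posterior = {"failure": 0.2, "ok": 0.8}
costs = {
    ("repair", "failure"): 10, ("repair", "ok"): 10,  # repair costs 10 either way
    ("wait", "failure"): 100, ("wait", "ok"): 0,      # waiting is free unless it fails
}

def loss(action, state):
    return costs[(action, state)]

most_probable_state = max(posterior, key=posterior.get)
decision = bayes_action(["repair", "wait"], posterior, loss)
```

Here the most probable state is "ok", which on its own would suggest waiting. But waiting carries an expected loss of about 20 against 10 for repairing, so the Bayes decision is to repair. The stakes, not just the probabilities, drive the choice.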

Machine learning researchers tend to focus on prediction accuracy. But predictive accuracy rarely captures the true stakes of a prediction-based decision.

If your colleague has some non-Bayesian way of making model-based decisions, there’s a Bayesian approach that is at least as good (and probably easier to understand).

Data drives predictions, which drive decisions, which drive future data. The feedback loop can cause big errors.

The true data-generating process doesn't lie awake at night worried about whether it’s generating data sufficient to serve your career goals.

The holy grail of AI is not inferring objective truth. It is inferring subjective truth as well as humans but without systematic errors due to cognitive biases.

All inference problems (including prediction) require some form of inductive bias. Pure empiricism isn't real.

On how to use deep learning without deifying it

A few millennia’s worth of philosophy about induction, prediction, and epistemology were not suddenly invalidated by the combination of backprop with GPUs.

For example, some might think supervised deep learning on big data is “pure empiricism.” But philosophy shows how the labels themselves could get you in trouble. See grue and bleen.

Some things cannot be learned even with infinite data. For example, given X you can’t predict Y and Z when X = Y + Z. Of course, if you have a fuzzy problem description, you can pretend like this isn’t happening.
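Here is a small sketch of the X = Y + Z case, assuming (my choice, for illustration) that Y and Z are independent zero-mean Gaussians. Two "worlds" with the variances swapped assign exactly the same likelihood to any observed X, yet disagree about how to split X into Y and Z.

```python
import math

def normal_logpdf(x, mean, var):
    """Log density of a normal distribution with the given mean and variance."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def log_likelihood_of_x(xs, var_y, var_z):
    """X = Y + Z with independent zero-mean normals, so X ~ N(0, var_y + var_z).
    Only the SUM of the variances enters the likelihood."""
    return sum(normal_logpdf(x, 0.0, var_y + var_z) for x in xs)

def posterior_mean_y(x, var_y, var_z):
    """E[Y | X = x] under the same assumptions."""
    return x * var_y / (var_y + var_z)

xs = [2.0, -1.5, 0.3, 4.1, -2.7]  # made-up observations of X

world_a = log_likelihood_of_x(xs, var_y=1.0, var_z=3.0)
world_b = log_likelihood_of_x(xs, var_y=3.0, var_z=1.0)

y_given_x_a = posterior_mean_y(2.0, var_y=1.0, var_z=3.0)
y_given_x_b = posterior_mean_y(2.0, var_y=3.0, var_z=1.0)
```

`world_a` and `world_b` are identical no matter how many observations of X you collect, so the data can never favor one world over the other. Yet for X = 2 the first world says Y is about 0.5 and the second says about 1.5. More data does not help; only an assumption from outside the data does.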

Some people believe in deep learning’s ability to pick up on structure in the world that humans have yet to name. This belief has a Satanic Verse problem; divine truth is mingling with infernal deceit (spurious statistical patterns), and there is no way of telling which is which (until it’s too late).

Deep neural nets are amazing universal function approximators. You should be as specific as possible about the function you want to approximate.

Deep learning researchers often stumble upon architectures that work well, then retroactively explain why in terms of “invariances.” Hindsight is always 20/20.

End-to-end deep learning is a great place to start and a terrible place to finish. Use the brute force of end-to-end to show a solution exists, then search for a more symbolic, composable, and robust model that can achieve comparable results.

Don’t waste time trying to replace computer simulation with deep learning. Instead, use deep learning to enhance computational simulation by trading between compute and memory and doing deep approximate inference.

Help me find like-minded modelers (or enemy modelers!) by sharing this post.