Learning from Nassim Taleb trolling Nate Silver

plus, making money from prediction markets, and movements on AI ethics

AltDeep is a newsletter focused on microtrend-spotting in data and decision science, machine learning, and AI. It is authored by Robert Osazuwa Ness, a Ph.D. machine learning engineer at an AI startup and adjunct professor at Northeastern University.

Overview:

Negative Examples: What you don’t need to read and why.

Dose of Philosophy: Or, A Primer in Sounding Not Stupid at Dinner Parties: Profile of Karl Popper

Ear to the Ground: Governments and big tech scrambling to set standards on AI ethics. "Self-regulation is not going to work. Do you think that voluntary taxation works? It doesn’t." — Bengio

Startups You Could Have Started: Gong.io’s business model is painfully simple

Data-Sciencing for Fun & Profit: Decentralized prediction markets

The Essential Read: A high level view on the Twitter-war between Nate Silver and Nassim Taleb

Negative examples

Trending items that I’m not linking to and why

Reinforcement learning is definitely en vogue (I’m working on counterfactual policy evaluation in RL myself). Lots of tutorials are popping up on how to get spun up. Not linking because they will be there when you need them.

The Atlantic has a trending article about some wise guys who succeeded in selling GAN-generated images at fancy auctions, and in so doing trolled the art world muckety-mucks. This is mostly huffing and puffing about “but that’s not real art!” It distracts from a far more interesting question: tools for generative art (e.g., Processing) are essentially tools for generative modeling that lack an inference algorithm, so how exactly could fixing that enhance human creativity? For more on this, check out Daniel Ritchie’s talk at ProbProg 2018.

Dose of Philosophy: Or, A Primer in Sounding Not Stupid at Dinner Parties

Karl Popper and falsifiability

Philosophy has been tackling the key problems of machine learning and data science for centuries. This section makes sure you are up to speed on the philosophical roots of your craft.

Karl Popper is best known for the view that science proceeds by “falsifiability” — the idea that one cannot prove a hypothesis is true, or even have evidence of truth by induction (yikes!), but one can refute a hypothesis if it is false.

Suppose Popper were building an ML model of some real world data-generating phenomenon. He would make it a causal model, so that it could predict the outcome of experimental interventions on that phenomenon. He would hypothesize a model, build it, and use it to predict the outcome of an experiment. He would then actually run the experiment and if the results didn’t match those predicted by his model, he would declare the model wrong and iterate.

What he would not do is focus on optimizing some function of likelihood or predictive accuracy. We all know really wrong models can predict really well.

The problem with this approach of course is that there could be multiple models which have not yet been refuted by the experiments we’ve had the time and money to run. In his view, these models would be on equal footing, while most of us would favor the model that is more strongly supported by the experimental evidence. And of course, sometimes all we care about is prediction.

Popper’s ideas have been useful to me as a time saving tool both in work and life — I suspect there is a correlation between the falsifiability of an idea, and whether or not working on that idea will ever lead to something useful. Investor George Soros (the guy at the heart of a fascinating number of conspiracy theories) was Popper’s student at LSE, and he credits his investment success to this line of reasoning. That said, there is quite a lot of money to be made as a public intellectual or Youtuber peddling non-falsifiable memes based on, for example, George Soros.

Ear to the ground:

Miscellaneous happenings that ought to be on your radar.

Governments and big tech scrambling to set standards on AI ethics

Self-regulation is not going to work. Do you think that voluntary taxation works? It doesn’t. — Yoshua Bengio

In the wake of the collapse of Google’s AI ethics board, tech companies, as well as Washington, Brussels, and Beijing are competing to take the driver’s seat in setting global AI policy, and much rides on who gets it. The EU has published a set of ethical guidelines for "trustworthy AI" — a long wishlist of idealistic principles. Yoshua Bengio is pushing another set of ethical guidelines through Mila.

Why it matters: This is growing into something that will touch people’s careers. It also means there will be opportunities to become a thought leader or entrepreneur in this space.

AI Startups You Could Have Started

A weekly mini-profile of AI startups with (shocker) an actual revenue model.

Gong.io provides text analytics on transcripts of sales calls. That’s it — a simple yet powerful idea. Upon hearing it, your average data scientist can immediately think of basic NLP methods for predicting conversions down a sales funnel, and imagine what the ETL-pipeline should look like. Perhaps you already thought of this.

Data-Sciencing for Fun & Profit:

Data-Sciencing for Fun & Profit examines emerging trends on the web that could be exploited for profit using quantitative techniques.

Decentralized prediction markets provide opportunities for enterprising forecasters

Prediction markets are exchange-traded markets created for the purpose of trading on the outcome of events. These are typically events in politics, sports, the box office, etc. that are not covered in derivatives markets. Prediction markets “predict” event outcomes through the price, which is set by traders going long or short on the event outcome. Popular online markets include PredictWise and PredictIt.
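To make the "price as prediction" idea concrete, here is a minimal sketch. It assumes a binary contract that pays a fixed amount (here 1.0) if the event happens and nothing otherwise, and it ignores fees and spreads. The function names and the numbers in the usage note are made up for illustration; they are not taken from any particular market.

```python
def implied_probability(price, payout=1.0):
    """Probability implied by the price of a binary contract.

    Assumes the contract pays `payout` if the event occurs and 0 otherwise.
    """
    return price / payout


def expected_profit(price, my_probability, payout=1.0):
    """Expected profit per contract from buying at `price`,
    given your own (hypothetical) probability estimate. Fees are ignored."""
    return my_probability * payout - price
```

For example, a contract trading at 0.62 implies the market puts the event at roughly 62%. If your own analysis says 70%, `expected_profit(0.62, 0.70)` is positive, which is the sort of edge a forecaster would be looking for.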

There is an emerging cohort of decentralized prediction markets relying on blockchain technology. Augur and Gnosis are two such platforms growing in adoption and community. The proposed benefit of decentralizing prediction markets is that they will open up a long tail of events to trade on. Alternatively, they could open up markets typically limited to an exclusive set of elite investors; for example, Flux.market proposes to allow people to short startups.

Should I care? Yes, if you are a talented forecaster, or you have professional knowledge or analysis tools relevant to the domains covered on these markets (e.g., you work in commodities, utilities, politics, etc.). My gut tells me a bit of domain knowledge and/or empirical methodology could do well in a competition with early adopters from the crypto-bro culture. But beware: like other crypto applications, it is still quite “Wild West”, i.e., lots of opportunity and lots of danger.

The Essential Read: Key takeaways from the Twitter-war between Nate Silver and Nassim Taleb

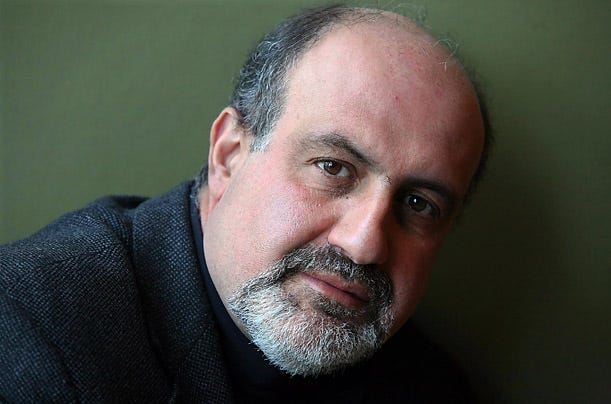

Nate Silver is the election-forecasting statistician and founder of FiveThirtyEight.com. Nassim Taleb is a former options trader and author of The Black Swan, Antifragile, and Skin in the Game. Taleb likes to talk shit, especially on left-leaning intellectual elites. Thus, this Twitter war was mostly flame, and so perhaps you ignored it. That said, there are some interesting takeaways relevant to anyone who thinks hard about how to build models and communicate their output.

Here is a summary:

Nate Silver’s election predictions are based on Monte Carlo simulation from a probabilistic generative model of the real world (conditioned on polls, demographic data, etc). The value reported for a possible election outcome is the percent of times simulation from that model resulted in that outcome.
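As a rough illustration of what "percent of simulations" means, here is a toy Monte Carlo sketch. It is not FiveThirtyEight's model: the three states, their electoral votes, the polled margins, and the error sizes are all hypothetical, and the only structure assumed is a shared national polling error plus independent state-level noise.

```python
import random

# Hypothetical inputs: state -> (electoral votes, polled margin for candidate A, in points)
STATES = {
    "State 1": (20, 3.0),
    "State 2": (15, -1.0),
    "State 3": (10, 0.5),
}
VOTES_TO_WIN = 23  # majority of the 45 hypothetical electoral votes


def simulate_once(rng, national_sd=3.0, state_sd=4.0):
    """Draw one simulated election and return candidate A's electoral votes."""
    # A shared national error makes state outcomes correlated.
    national_error = rng.gauss(0.0, national_sd)
    votes = 0
    for electoral_votes, margin in STATES.values():
        simulated_margin = margin + national_error + rng.gauss(0.0, state_sd)
        if simulated_margin > 0:
            votes += electoral_votes
    return votes


def win_probability(n_sims=10_000, seed=0):
    """Fraction of simulated elections in which candidate A wins."""
    rng = random.Random(seed)
    wins = sum(simulate_once(rng) >= VOTES_TO_WIN for _ in range(n_sims))
    return wins / n_sims
```

Calling `win_probability()` returns a single number between 0 and 1. Note that changing `national_sd` or `state_sd` changes that number, and nothing in the reported figure tells you how those modeling choices were made.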

Model building (even when informed by data) is a highly subjective endeavor. That’s fine, but most journalists, many of whom see Silver as some Delphic Oracle, do not realize this. They see only the statistical uncertainty of the forecast, by way of its reporting a Monte Carlo point estimate of a probability. They do not see any representation of uncertainty about the model and what it might be getting wrong about the world.

Finally, Taleb (who you’ll recall was an options trader) takes a skin-in-the-game perspective where the better way to evaluate a forecast is by betting cash on it, in which case you’d care about the volatility of that forecast over time, not just its value on election day. Volatility partially reveals model uncertainty. For example, failing to account for key current causes of future event outcomes leads to more fluctuation due to a latent confounder.
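One simple way to look at forecast volatility is the spread of day-to-day changes in the reported probability. The sketch below uses that measure, which is a stand-in chosen for illustration and not Taleb's martingale pricing argument. Both forecast series are invented.

```python
import statistics


def forecast_volatility(probs):
    """Standard deviation of successive changes in a forecast probability."""
    changes = [later - earlier for earlier, later in zip(probs, probs[1:])]
    return statistics.pstdev(changes)


# Two hypothetical forecasters who end at the same election-day value.
steady = [0.60, 0.62, 0.63, 0.65, 0.66, 0.68, 0.70]
jumpy = [0.60, 0.85, 0.55, 0.80, 0.50, 0.75, 0.70]
```

Both series finish at 0.70, so judged on the election-day number alone they look identical. `forecast_volatility(jumpy)` is much larger than `forecast_volatility(steady)`, and someone trading on the jumpy forecast along the way would have been whipsawed.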

Inherent stochasticity: A key source of volatility is how often “black swan” events (e.g., the second FBI investigation of Clinton’s emails) occur. In elections, such events inject a tremendous amount of stochasticity relative to, say, sporting events. But since sporting events happen more often than elections, it’s tempting to think that FiveThirtyEight’s performance in forecasting sporting outcomes extends to its election forecasts.

Reporting probabilities vs “skin in the game”: The key criticism of Silver is that his reputation as a forecaster doesn’t depend on this volatility. Journalists will just take the outcome with the highest probability on election day as his prediction. If that prediction is right, he wins. If it is wrong, then he still wins by saying (rightly) that’s just how probability works: the event with the second highest probability occurred. In fact, he looked extra good in 2016 despite giving Clinton the higher probability, since his Trump probability was higher than that given by other forecasters. This means he has no skin in the game, as Taleb would say.

Final thoughts. Apropos of the prediction market stuff mentioned above, wouldn’t it be interesting if FiveThirtyEight were to take positions in those markets and publish them on their website alongside their predictions?

Why you should care about the Nate Silver vs Nassim Taleb Twitter war

Nassim Taleb’s case against Nate Silver is bad math — Nautilus

Taleb, N.N., 2018. Election predictions as martingales: an arbitrage approach. Quantitative Finance, 18(1), pp.1-5.

Sensitivity analysis of election poll correlation — Cross Validated