Are metrics ruining AI? A view from econometrics.

How the idea of surrogate metrics can bring sanity to the metrics controversy

AltDeep is a newsletter focused on microtrend-spotting in data and decision science, machine learning, and AI. It is authored by Robert Osazuwa Ness, a Ph.D. machine learning engineer at an AI startup and an adjunct professor at Northeastern University.

This is a special issue on the topic of metrics in AI. I introduce metrics, why they matter, and how they can harm. I introduce a concept from econometrics and causal inference that helps one understand when metrics are useful and when they are harmful. I pay special attention to a natural language understanding (NLU) metric called SuperGLUE.

Ideal Audience: This is a must-read for AI product managers, investors, entrepreneurs, and anyone else who has skin in the game when betting on the success of an AI product, especially in NLU.

Teaching to the test: the problem of metrics in machine learning

Metrics are the things you measure to evaluate how well you are doing with respect to some goal or strategy. Typically the goal or strategy is long-term, while the metric gives you actionable feedback in the short term.

The Harvard Business Review recently published an article on how metrics can undermine your business when you "replace strategy with metrics."

The authors give a compelling example: Wells Fargo’s cross-selling shenanigans. Wells Fargo’s long-term goal was to have a deeper relationship with customers. They decided that cross-selling was a good short-term metric for quantifying how well they were performing on that long-term objective. They incentivized salespeople based on this metric, and so salespeople gamed the metric by setting up accounts that customers had not authorized. This, of course, caused huge reputational damage that hurt their long-term goal of having a deeper relationship with customers.

MIT Sloan Management Review fired back at this article with the opposite argument.

They argue that adverse outcomes due to metrics are caused not by the metrics themselves, but by inept leaders who select poor metrics or otherwise fail to give them much thought. They point to the big tech companies as the gold standard for using metrics well, because these companies test metrics with experimentation and incorporate metrics into algorithms that learn from data.

However, Rachel Thomas, director of the USF Center for Applied Data Ethics and co-founder of fast.ai, points out the myriad problems that occur, both for the company and for society, when big tech companies algorithmize metrics.

She gives an example of how this lets people game algorithms the same way Wells Fargo employees gamed a metric. She describes how Russia Today’s Mueller Report video got far more “Up Next” recommendations than any other Mueller Report video. Somehow the Russians had figured out the metrics that drive YouTube recommendations and gamed them.

She also points out how the pursuit of the wrong metric can lead to problems. Thomas provides an example of how an algorithm that predicted risk factors for stroke ended up merely predicting whether someone was privileged enough to make use of healthcare services. Privileged means, for example, being able to take medical leave and arrange childcare so one can focus on healing.

So who’s right? If there are good metrics, how do we find them and build them into our experimentation platforms and algorithms in a way that moves us toward our long-term objectives and does not cause harm?

Resources

Don’t let metrics undermine your business — Harvard Business Review

Don’t Let Metrics Critics Undermine Your Business — MIT Sloan Management Review

The problem with metrics is a big problem for AI — fast.ai blog

Online interfaces to cutting-edge natural language understanding models draw attention to metrics

Machine learning Twitter has had some back-and-forth regarding metrics in the context of NLU (natural language understanding).

Much of this has been driven by public online interfaces to cutting-edge NLU models like GPT-2.

Some call these “deep learning-based” models, and what they mean is that they rely on pre-trained word embeddings and an end-to-end deep transformer network architecture. I mention this because radically different systems could still make use of deep learning in their components. Here I call these models transformer models.

It turns out it is not only easy to come up with examples that demonstrate how poor these transformer models are at commonsense reasoning; it is also fun to take screenshots and share them on social media, like this one:

This kind of math example is popular for several reasons:

It highlights an apparent failure of AI to do the kind of commonsense math an infant can do. It is also easy to come up with examples that demonstrate more nuanced but meaningful failures in commonsense reasoning.

It is easy to understand why the failure occurs, given even a basic understanding of how these kinds of models work.

It is also easy, with minimal coaxing, to get the algorithm to spit out racist, sexist, and sexually explicit content. These are provocative examples of these algorithms' bias.

This raises the question: can we define NLU metrics that encourage progress in overcoming these failures?

Resources

Talk to Transformer (used to generate the example above)

The SuperGLUE metric for NLU

What is SuperGLUE?

SuperGLUE is a metric for NLU performance.

SuperGLUE is a group of “tasks.” Each task is like a standardized test that evaluates a different aspect of natural language understanding. Like most standardized tests, the tasks have multiple-choice or true/false answers. An NLU algorithm takes these tests, whose answers are kept secret, through a website, and for each test, the website scores the algorithm's performance with statistics like accuracy and F1. The SuperGLUE metric itself is an average of these scores. An algorithm can only take the test a limited number of times each month, to prevent people from simply tuning the algorithm to the metric. A human-performance baseline was established by recruiting human “test-takers” through Mechanical Turk and averaging their scores.
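To make the averaging concrete, here is a minimal sketch of how a headline score like SuperGLUE's can be aggregated, assuming the rule described in the SuperGLUE paper: a task with several metrics (CB reports accuracy and F1, for instance) gets the mean of those metrics, and the benchmark score is the unweighted mean of the task scores. The task names are real, but the numbers are made up for illustration, and the real benchmark averages over more tasks than the three shown here.

```python
from statistics import mean

# Made-up per-task results for illustration; not real leaderboard numbers.
results = {
    "RTE": {"accuracy": 80.0},
    "CB": {"accuracy": 90.0, "f1": 86.0},
    "WSC": {"accuracy": 65.0},
}


def task_score(metrics: dict[str, float]) -> float:
    """Collapse a task's metrics (e.g. accuracy and F1) into one number by averaging."""
    return mean(metrics.values())


def benchmark_score(results: dict[str, dict[str, float]]) -> float:
    """Headline score: unweighted mean of the per-task scores."""
    return mean(task_score(m) for m in results.values())


for task, metrics in results.items():
    print(task, task_score(metrics))
print("overall", round(benchmark_score(results), 2))
```

Here the made-up task scores of 80, 88, and 65 average to about 77.67. Because every task counts equally, a gain on an easy task moves the headline number as much as the same gain on a hard one, so a single average can hide where a model actually fails.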

Who’s behind SuperGLUE?

Researchers from New York University, the University of Washington, Facebook AI Research, and DeepMind.

How well do the transformer models perform on SuperGLUE?

The transformer model does surprisingly well on some questions that seem to require logic and common sense.

The reference paper for SuperGLUE includes results for the transformer model BERT. BERT beats all the other reference models on every task, so it is only meaningful to compare it to the human reference point.

BERT does well on two related tasks that seem to me to require a bit of commonsense reasoning ability. The first, called recognizing textual entailment (RTE), pairs a paragraph with a hypothesis about the contents of that paragraph. The NLU system has to decide whether the hypothesis logically follows from the paragraph; in other words, whether the paragraph entails the hypothesis. For example:

Text: Dana Reeve, the widow of the actor Christopher Reeve, has died of lung cancer at age 44, according to the Christopher Reeve Foundation.

Hypothesis: Christopher Reeve had an accident.

Entailment: False

BERT got 79% accuracy, compared to 93.6% for humans.

The second task, called the CommitmentBank (CB) task, is like the previous one, except that a variation of the hypothesis is embedded as a clause in the paragraph. The NLU system must decide how committed the speaker is to the truth of that clause. For example, in the following text, the speaker asks whether they are “setting a trend,” but it is unclear whether they are indeed setting a trend.

Text:

B: And yet, uh, I we-, I hope to see employer based, you know, helping out. You know, child, uh, care centers at the place of employment and things like that, that will help out.

A: Uh-huh.

B: What do you think, do you think we are, setting a trend?

Hypothesis: they are setting a trend

Entailment: Unknown

BERT got 90.4% accuracy, compared to 98.9% for humans.
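SuperGLUE scores CB with both accuracy and an F1 score averaged over its classes, because the classes are imbalanced. The sketch below shows why both numbers matter, using ten made-up gold labels and predictions. I write the three labels as entailment, contradiction, and neutral, with neutral standing in for the "Unknown" case above; the toy classifier misses only the single, rare neutral example.

```python
LABELS = ("entailment", "contradiction", "neutral")


def accuracy(gold: list[str], pred: list[str]) -> float:
    """Fraction of examples where the prediction matches the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold: list[str], pred: list[str], labels=LABELS) -> float:
    """Unweighted mean of per-class F1, so a rare class counts as much as a common one."""
    f1_scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)


# Toy data (made up): five entailment, four contradiction, one neutral example.
gold = ["entailment"] * 5 + ["contradiction"] * 4 + ["neutral"]
# A classifier that gets everything right except the single neutral example.
pred = ["entailment"] * 5 + ["contradiction"] * 4 + ["entailment"]

print(f"accuracy = {accuracy(gold, pred):.2f}")  # 0.90
print(f"macro F1 = {macro_f1(gold, pred):.2f}")  # 0.64
```

Accuracy barely notices the missed class, while macro F1 drops sharply because the neutral class contributes an F1 of zero.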

The transformer model does poorly on other types of commonsense reasoning tasks.

One of the tasks BERT performs poorly on relative to humans is the Winograd Schema Challenge (WSC) task. This is a coreference resolution task, where examples consist of a sentence with a pronoun and a list of noun phrases from the sentence. The system must determine the correct referent of the pronoun from among the provided choices. For example:

Text: Mark told Pete many lies about himself, which Pete included in his book. He should have been more truthful.

Coreference: Do "Pete" and "He" refer to the same entity? (False)

Here, BERT got 64.3% accuracy, compared to 100% for humans.

Bottom line: SuperGLUE is a response to a problem with its predecessor, GLUE, where the gap between humans and machines closed quickly after the benchmark was released. On the RTE task, the home stretch of 14.6 percentage points, from 79% accuracy to the human 93.6%, might be much more difficult than reaching that initial 79%. Or maybe not. In the resources below, I link to an article by Abhijit Mahabal that deep-dives into the problems with RTE.
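One way to see why the home stretch could be harder is to restate each gap as the share of the model's remaining errors it would have to eliminate. The sketch below does that arithmetic for the three accuracies quoted above; it says nothing about how hard the remaining examples actually are, only how much of the error has to go.

```python
# (model accuracy, human accuracy) in percent, as quoted in the text above.
REPORTED = {
    "RTE": (79.0, 93.6),
    "CB": (90.4, 98.9),
    "WSC": (64.3, 100.0),
}


def home_stretch(model_acc: float, human_acc: float) -> tuple[float, float]:
    """Return (gap in percentage points, share of the model's errors it must still eliminate)."""
    gap = human_acc - model_acc
    model_err = 100.0 - model_acc
    return gap, gap / model_err


for task, (model_acc, human_acc) in REPORTED.items():
    gap, err_share = home_stretch(model_acc, human_acc)
    print(f"{task}: {gap:.1f} points behind; must remove {err_share:.0%} of its errors")
```

For RTE, the 14.6-point gap means eliminating about 70% of the model's remaining errors; for WSC, where humans score 100%, it means eliminating all of them.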

Big picture — Some researchers and startups cry foul

Some researchers criticize SuperGLUE as being biased towards algorithms like transformer models that are good at text search. Strong text-search performance and natural language understanding are not the same thing. These critics argue that focusing on these metrics hurts progress in NLU.

One of the more convincing arguments is that SuperGLUE lacks questions that press hard on mathematical and logical reasoning, much harder than the CB, WSC, and RTE tasks mentioned above. Such questions might look like:

Question: I had six pies but gave one whole pie and a half of one pie to Sam, so now I have ____.

Answer: four and a half pies

Question: My name is Ruger and I live on a farm. There are four other dogs on the farm with me. Their names are Snowy, Flash, Speedy, and Brownie. What do you think the fifth dog's name is?

Answer: Ruger

Note that these could easily be refactored into multiple-choice questions.
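Here is a minimal sketch of that refactoring, under my own assumptions: the MultipleChoiceItem format, the distractor choices, and the overlap_baseline scorer are hypothetical and not part of SuperGLUE. The baseline picks whichever choice shares the most words with the question, a crude stand-in for the text-matching shortcut the critics worry about.

```python
import re
from dataclasses import dataclass


@dataclass
class MultipleChoiceItem:
    question: str
    choices: list[str]
    answer_index: int  # position of the correct choice


# Hypothetical items; the distractors are invented for illustration.
ITEMS = [
    MultipleChoiceItem(
        question="I had six pies but gave one whole pie and a half of one pie "
        "to Sam, so now I have ____.",
        choices=["four and a half pies", "five pies", "one and a half pies", "six pies"],
        answer_index=0,
    ),
    MultipleChoiceItem(
        question="My name is Ruger and I live on a farm. There are four other dogs "
        "on the farm with me. Their names are Snowy, Flash, Speedy, and Brownie. "
        "What do you think the fifth dog's name is?",
        choices=["Snowy", "Ruger", "Speedy", "Brownie"],
        answer_index=1,
    ),
]


def words(text: str) -> set[str]:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def overlap_baseline(item: MultipleChoiceItem) -> int:
    """Pick the choice sharing the most words with the question (ties go to the first)."""
    q = words(item.question)
    return max(range(len(item.choices)), key=lambda i: len(words(item.choices[i]) & q))


def score(items: list[MultipleChoiceItem], predict) -> float:
    """Accuracy of a predict(item) -> choice index function."""
    return sum(predict(item) == item.answer_index for item in items) / len(items)


print(score(ITEMS, overlap_baseline))
```

The baseline gets both items wrong: on the pie question it prefers "one and a half pies" because that choice reuses the most words from the question, and on the dog question every name appears in the text, so surface overlap cannot tell them apart. Only the arithmetic or the riddle's logic points to the right choice.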

This matters not just to science, but to business.

Cutting-edge transformer models take Google- or Facebook-level resources to train. If SuperGLUE is biased towards these types of models, then it is biased against scrappy startups that might disrupt the space with some radically new algorithm. What should they say to the VC or client who asks how well their algorithm scores on SuperGLUE?

Resources

Wang, A., Pruksachatkun, Y., Nangia, N., Singh, A., Michael, J., Hill, F., ... & Bowman, S. R. (2019). SuperGLUE: A stickier benchmark for general-purpose language understanding systems. arXiv preprint arXiv:1905.00537.

AI researchers launch SuperGLUE, a rigorous benchmark for language understanding — VentureBeat

Do NLP Entailment Benchmarks Measure Faithfully? — Abhijit Mahabal on Medium

What econometrics and causal inference have to say about metrics

So what we have with SuperGLUE is the metrics problem described in the HBR article. The long-term objective is achieving human-level performance in NLU. The metric is SuperGLUE. Some argue that the metric is biased towards outcomes other than good NLU, such as improved search performance for companies like Facebook and Google. Researchers who realize that all they need to get a paper accepted at a top AI conference is a high SuperGLUE score will game the metric, the way Wells Fargo employees gamed the cross-selling numbers.

There is some science on this. Econometrics, along with other fields such as epidemiology, has a concept called the surrogate metric paradox. A metric that gives you more immediate feedback on a long-term objective is called a surrogate metric. The surrogate metric paradox is what happens when optimizing the surrogate metric hurts your long-term objective.

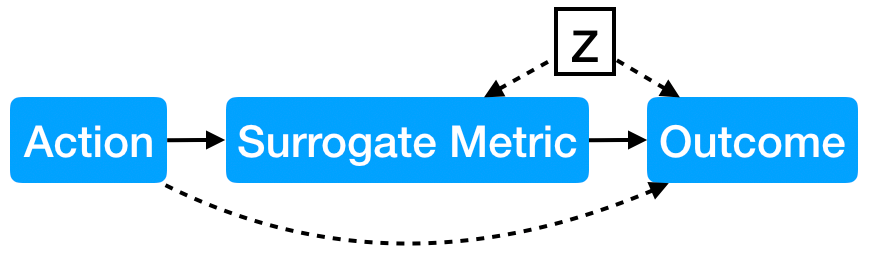

Causal inference provides an excellent intuition for this paradox using directed graphs. It turns out that surrogate metrics work when the path of causality flows as follows:

Action → Surrogate metric → Outcome

Here an action is a cause of the surrogate metric, and the surrogate metric is a cause of the outcome.

However, the surrogacy paradox can bite you when causality flows in either of these ways:

Action → Surrogate metric → Outcome, plus a direct path Action → Outcome

Action → Surrogate metric → Outcome, plus an unobserved Z → Surrogate metric and Z → Outcome

There are two cases here, either of which could cause the paradox. In the first case, there is an alternate path from the action to the outcome that goes around the metric. In the second case, the metric and the outcome have some unobserved common cause (Z).
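To see both failure modes next to the case where a surrogate works, here is a minimal simulation under assumed linear-Gaussian models with arbitrary coefficients; the function names and numbers are mine, chosen for illustration. In all three structures the action raises the metric, and without any intervention the metric is positively correlated with the outcome. In the confounded structure I assume the metric's own effect on the outcome is negative, while Z pushes both up, which is what makes the observed correlation misleading.

```python
import random
from statistics import mean


def corr(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation, written out to avoid extra dependencies."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate(structure: str, action: float, n: int = 20_000, seed: int = 0):
    """Draw (metric, outcome) lists for n units that all receive the same action level.

    The linear coefficients are arbitrary choices for illustration.
    """
    rng = random.Random(seed)  # same seed -> same noise for every action level
    metric, outcome = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)  # unobserved common cause (used only in "confounded")
        e_m, e_o = rng.gauss(0, 1), rng.gauss(0, 1)
        if structure == "chain":  # action -> metric -> outcome
            m = action + e_m
            o = m + e_o
        elif structure == "direct":  # chain plus a harmful action -> outcome path
            m = action + e_m
            o = m - 3.0 * action + e_o
        elif structure == "confounded":  # chain plus Z -> metric and Z -> outcome
            m = action + 2.0 * z + e_m
            o = -0.5 * m + 3.0 * z + e_o
        else:
            raise ValueError(f"unknown structure: {structure}")
        metric.append(m)
        outcome.append(o)
    return metric, outcome


for structure in ("chain", "direct", "confounded"):
    m0, o0 = simulate(structure, action=0.0)
    m1, o1 = simulate(structure, action=1.0)
    print(
        f"{structure:>10}: metric {mean(m1) - mean(m0):+.2f}, "
        f"outcome {mean(o1) - mean(o0):+.2f}, "
        f"corr(metric, outcome) without the action {corr(m0, o0):+.2f}"
    )
```

Only in the chain does raising the metric raise the outcome. With the direct harmful path, or with the hidden common cause, the same action lowers the outcome even though the metric still goes up and still looks positively related to the outcome in passive data.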

The first case is where your action directly hurts the outcome but gets you a high value for the metric, as with Wells Fargo’s cross-selling. In AI research, this would be machine learning researchers focusing on models that push the envelope only on text search and get them more publications, but that pull AI research away from true NLU outcomes. An example of a true NLU outcome would be having a coherent open-ended conversation with a chatbot, as in the film Her.

The second case is more subtle, but important. Rachel Thomas’s example of predicting risk factors for stroke is a case of this phenomenon, because both the metric and the outcome were influenced by the latent common cause of wealth.

The latent common cause of the SuperGLUE metric and the NLU outcome could be some natural bias in how human language understanding works. Suppose that human language understanding is mostly recognizing patterns in words and syntax, along with a tiny but fundamentally important bit of high-level reasoning about abstract concepts. Then, if the metric over-weighted the patterns and under-weighted the abstract reasoning, you’d incentivize algorithms that were good at learning the former and poor at the latter.
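Here is a toy version of that hypothesis, with every number invented for illustration. Two hypothetical models have different accuracies on pattern-matching items and on reasoning items. The benchmark score weights items by how often they appear (mostly patterns, a few reasoning items), while the outcome we actually care about treats reasoning as a bottleneck, which I model as the product of the two skills. Both the 90/10 item mix and the product form are assumptions, not properties of SuperGLUE.

```python
# Hypothetical accuracy of each model on the two kinds of items (made-up numbers).
MODELS = {
    "pattern_matcher": {"pattern": 0.95, "reasoning": 0.40},
    "reasoner": {"pattern": 0.80, "reasoning": 0.90},
}

# Assumed share of benchmark items of each kind: mostly surface patterns.
BENCHMARK_MIX = {"pattern": 0.9, "reasoning": 0.1}


def benchmark_score(skills: dict[str, float]) -> float:
    """What the leaderboard sees: accuracy weighted by how common each item type is."""
    return sum(BENCHMARK_MIX[kind] * skills[kind] for kind in BENCHMARK_MIX)


def understanding(skills: dict[str, float]) -> float:
    """Assumed outcome: both skills are needed, so a weak one caps the result."""
    return skills["pattern"] * skills["reasoning"]


for name, skills in MODELS.items():
    print(f"{name:>15}: benchmark {benchmark_score(skills):.3f}, "
          f"understanding {understanding(skills):.3f}")
```

With these invented numbers, the benchmark prefers the pattern matcher (0.895 vs 0.810), while the assumed outcome prefers the reasoner (0.720 vs 0.380): the incentive problem in miniature.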

Alas, I think that is exactly the case with transformer models and SuperGLUE.

Resources

VanderWeele, T. J. (2013). Surrogate measures and consistent surrogates. Biometrics, 69(3), 561-565.

Thanks for reading. Why not share this post with a colleague or friend who would read this and think I’m full of crap and scream at me on Twitter?