Cognitive Bias & Deep Learning Bro-dom

The typical machine learning playbook and why it sucks.

The following is a snippet from Altdeep’s workshop Refactored: Evolve Beyond a Glorified Curve-Fitter.

The Typical Machine Learning Playbook

The machine learning playbook is the workflow engineers often follow when they tackle a real-world problem with machine learning.

The standard playbook has four steps:

1. Take the specific problem we are trying to solve and map it to a “task” or “tasks.”
2. Find and learn the most popular library or state-of-the-art deep learning architecture for the task.
3. Swap out that public data for our problem data and munge it until it fits the algo.
4. Apply hacks and rules-of-thumb to get training to work.

1. Take the specific problem we are trying to solve and map it to a “task” or “tasks.”

Step one of the playbook is to take the real-world problem we are trying to solve and map it to a "task" or "tasks." By "task," I mean a canonical statistical modeling or machine learning task: one of the categories under which research is published. Examples are "image classification," "text summarization," and "time series forecasting."

2. Find and learn the most popular library or state-of-the-art deep learning architecture for the task.

The next step is to find and learn the most popular library or state-of-the-art deep learning architecture for the task. We'll Google around trying to see what the state-of-the-art is or what the most popular tools are. We'll then work through an online tutorial on how to use the tool. The tutorial typically uses a well-known public dataset, like MNIST.

3. Swap out that public data for our problem data and munge it until it fits the algo.

In the next step, we swap out that public data for our problem data and munge it until it fits the algorithm. For example, suppose we have a computer vision problem, and the tutorial uses ImageNet data. We'll go into the tutorial code and swap the ImageNet data for image data from our organization. However, that usually isn't enough. We'll often need to munge the data until we can get it working with the algorithm. We transform, shift, or rescale the data. If it is continuous, we might discretize it. If it is discrete, we might "relax" it into continuous data. We might upsample particular subsets of the data or otherwise augment it. We do whatever we need to do to get the algorithm to train.
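The munging moves listed above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not any library's API: the helper names (`rescale`, `discretize`, `relax`, `upsample`) and the toy readings are hypothetical, "relaxing" is shown as label smoothing, and upsampling is shown as random oversampling of one class. The usage at the bottom also shows how discretizing can make distinct values indistinguishable.

```python
import random

def rescale(xs):
    """Min-max rescale values into [0, 1]."""
    lo, hi = min(xs), max(xs)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in xs]
    return [(x - lo) / span for x in xs]

def discretize(xs, n_bins):
    """Map values in [0, 1] to integer bin indices 0..n_bins-1 (this is lossy)."""
    return [min(int(x * n_bins), n_bins - 1) for x in xs]

def relax(label, n_classes, eps=0.1):
    """Relax a discrete class label into a continuous vector via label smoothing."""
    return [(1 - eps) * (i == label) + eps / n_classes for i in range(n_classes)]

def upsample(examples, labels, target, seed=0):
    """Randomly duplicate examples of class `target` until it matches the largest class."""
    rng = random.Random(seed)
    counts = {c: labels.count(c) for c in set(labels)}
    minority = [(x, y) for x, y in zip(examples, labels) if y == target]
    n_extra = max(counts.values()) - counts[target]
    extra = [rng.choice(minority) for _ in range(n_extra)]
    pairs = list(zip(examples, labels)) + extra
    return [x for x, _ in pairs], [y for _, y in pairs]

# Hypothetical sensor readings: after 2-bin discretization, 7.5, 7.9 and 12.0
# all land in bin 1, so the model can no longer tell them apart.
readings = [3.0, 7.5, 7.9, 12.0]
print(discretize(rescale(readings), 2))  # [0, 1, 1, 1]
```

Each helper on its own is harmless; the point is how easily a chain of them reshapes the data to suit the algorithm rather than the problem.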

4. Apply hacks and rules-of-thumb to get training to work.

In the last step, we apply hacks and rules-of-thumb to get training to work. We try various tweaks of the hyperparameters of the algorithm to get the performance we want.
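In its most mechanical form, this step is a hyperparameter search. The sketch below is a plain grid search under a loud assumption: `train_and_score` is a hypothetical stand-in whose toy formula replaces actual training, so the example runs on its own. The hyperparameter names and values are illustrative only.

```python
import itertools
import math

def train_and_score(lr, batch_size):
    """HYPOTHETICAL stand-in for 'train the model, return a validation score'.
    A toy formula replaces real training so this sketch is self-contained."""
    return -(math.log10(lr) + 3) ** 2 - (batch_size - 64) ** 2 / 1000

def grid_search(grid):
    """Try every combination of hyperparameter values; keep the best-scoring one."""
    names = list(grid)
    best_config, best_score = None, -math.inf
    for values in itertools.product(*(grid[n] for n in names)):
        config = dict(zip(names, values))
        score = train_and_score(**config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

grid = {"lr": [1e-4, 1e-3, 1e-2], "batch_size": [32, 64, 128]}
best, score = grid_search(grid)
print(best)  # {'lr': 0.001, 'batch_size': 64}
```

Nothing in the loop refers to the problem being solved: the search only sees knobs and a score, which is why these tweaks yield so little insight.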

Problems with this approach: The Law of the Instrument

So what's wrong with this playbook? If we can get good accuracy on test data, then what exactly is the problem?

There is nothing wrong with the subtasks in each of those steps. The problem is that the overall workflow encourages a kind of cognitive bias.

That bias is called the "Law of the Instrument." It is also known as the "law of the hammer," Maslow's hammer, or the golden hammer. It is a cognitive bias that involves an over-reliance on a familiar tool.

To quote psychologist Abraham Maslow, who popularized the idea:

I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.

Let's look at how this bias emerges at each step in the workflow.

1. Take the specific problem we are trying to solve and map it to a “task” or “tasks.”

So in step 1, we take the specific problem we are trying to solve and map it to a "task" or "tasks." We are shifting focus from the context-specific problem we are trying to solve to a broad problem defined by a research community. When we do this, it becomes easy to favor performance metrics from machine learning research over the key performance indicators relevant to our context. In short, we bias ourselves away from solutions specific to our problem's context and toward solutions to problems framed by people who are trying to publish. That doesn't mean it will lead to a bad result. We just need to be aware that we may be losing something in translation. Model-based machine learning seeks to avoid this translation from the context-specific to the general.

2. Find and learn the most popular library or state-of-the-art deep learning architecture for the task.

Next, we find the most popular library or state-of-the-art deep learning architecture for the task. When we do this many times, we get good at applying these libraries and using these architectures. The problem is that getting good at using libraries is not the goal.

Firstly, we should consider that large tech companies are behind these libraries. These companies are trying to build developer ecosystems. When a company pulls you into their ecosystem, you know you will not be that ecosystem's apex predator.

Secondly, you have to spend a lot of time trying to gain mastery of a library. If you work with TensorFlow, PyTorch, or another deep learning library, you know it takes a while before you get good at deciphering those cryptic error messages.

To be clear, getting good at using libraries and various architectures is necessary to be good at what we do. The point is that getting good at using libraries is not the objective. It is a means to an end.

When we make it the objective, we start commoditizing ourselves, the same way freelancers on websites like Upwork have commoditized themselves.

The reason Google and Facebook open-source tools like TensorFlow and PyTorch is that they want to commoditize the skillset. They put tremendous effort and resources into devaluing the work you are putting in to get good at using these tools.

Our goal is to become better modelers, and getting good at libraries is a means to that end.

3. Swap out that public data for our problem data and munge it until it fits the algo.

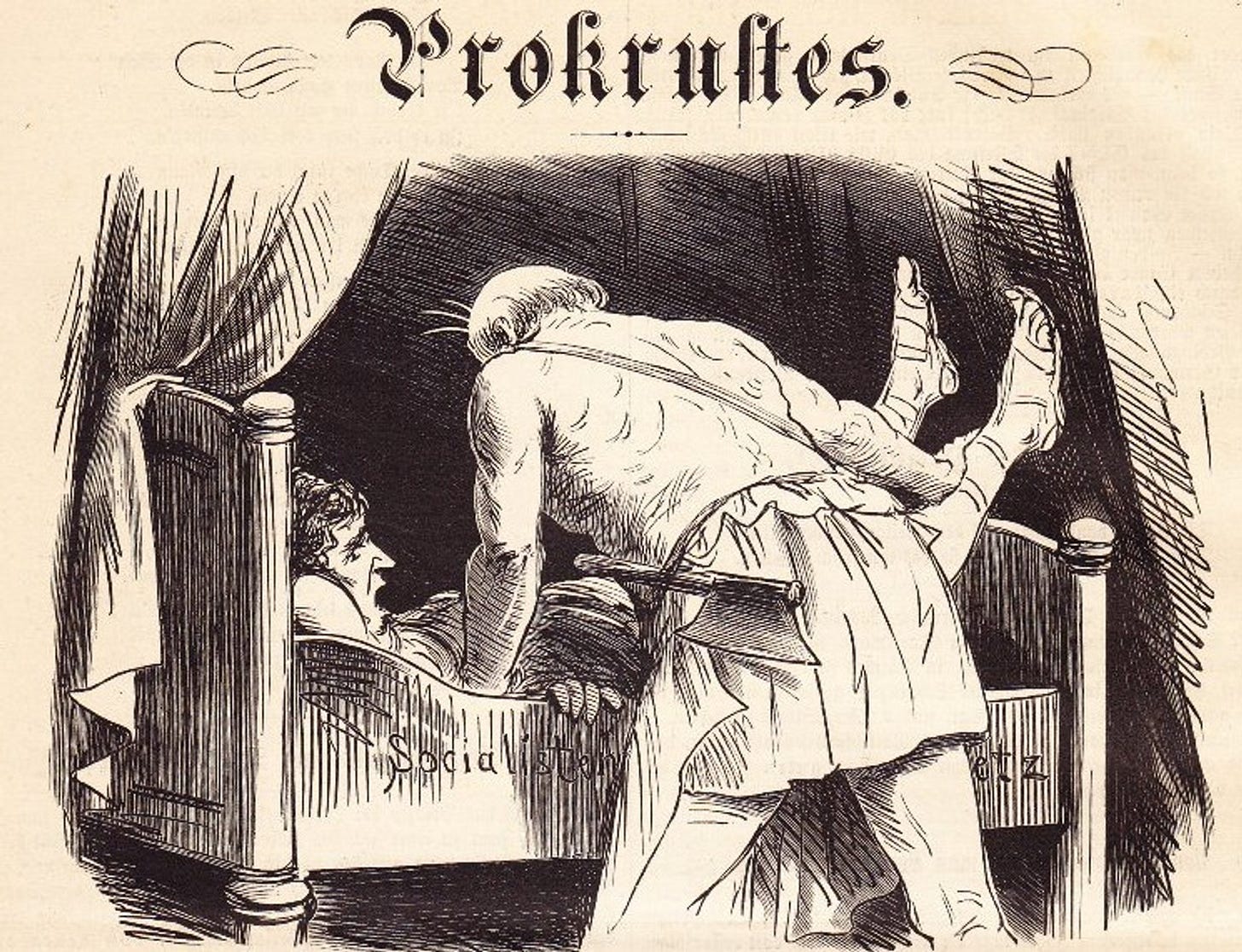

In step 3, we swap out that public data for our context-specific data and munge it until it fits the algorithm. The focus on munging can lead to a Procrustean bed problem.

Procrustes was a psychopathic innkeeper from Greek mythology. He mutilated the bodies of his guests to make them suitable for his guest bed. If they were too short for the bed, he would lengthen them on a stretcher. If they were too tall, he would take an ax to their legs.

Our mutilation of the data to suit the popular library or state-of-the-art algorithm is analogous to Procrustes' mutilation of the guest to suit his guest bed.

I can point you to specific cases where mutilating data removes information and therefore hurts performance. However, I want to focus on cognitive bias. The focus on munging the data to suit the algorithm is another way we shift our thinking away from the problem and its context. Rather than finding the right tool, we're trying to turn the data into a nail that will work with our hammer.

Why not find a bed that suits the guest? Why not build a bespoke model that suits the problem and the data?

That's the heart of the model-based machine learning philosophy.

4. Apply hacks and rules-of-thumb to get training to work.

As the last step, we apply hacks and rules-of-thumb to get training to work. The problem is that these hacks and rules-of-thumb don't give us insight into the problem we want to solve.

In deep learning, changes to hyperparameter settings and architecture choice can lead to drastic differences in performance.

If you are familiar with deep learning, you'll know that changing something like numerical precision (how numbers are rounded off) or switching from CPU to GPU can significantly impact performance. But choices like these have nothing to do with the problem domain.
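One plausible mechanism behind such hardware and precision effects is that floating-point arithmetic is not associative: a GPU that sums terms in parallel may group them differently from a sequential CPU loop, and lower precision rounds values differently. The toy example below only illustrates that floating-point behavior in plain Python; `sum_in_order` and `to_float32` are hypothetical helpers, and nothing here models a particular framework or GPU. (An explicit loop is used because recent Python versions make the built-in `sum()` compensate for rounding error.)

```python
import struct

def sum_in_order(values):
    """Add values strictly left to right, like one sequential loop."""
    total = 0.0
    for v in values:
        total += v
    return total

def to_float32(x):
    """Round a 64-bit Python float to the nearest 32-bit float and back."""
    return struct.unpack("f", struct.pack("f", x))[0]

# Floating-point addition is not associative: grouping changes the result.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False

# Same three numbers, two summation orders, two different answers.
print(sum_in_order([1e16, 1.0, -1e16]))  # 0.0
print(sum_in_order([1e16, -1e16, 1.0]))  # 1.0

# Lower precision stores 0.1 slightly differently.
print(to_float32(0.1))  # 0.10000000149011612
```

These discrepancies are tiny per operation, but they can compound over millions of training steps, and none of them says anything about the problem domain.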

In terms of architecture, consider the choice between GRUs and LSTMs in sequence modeling. If you don't know what those are, don't worry; suffice it to say they play the same role in deep learning architectures. Which one is better? Andrew Ng's deep learning course says LSTMs are a good default choice because they are more popular, while GRUs are simpler and therefore easier to train. That's true, but those are technical considerations. It's like considering nail-hammering technique. There is no connection to the underlying problem you are trying to solve.
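The "GRUs are simpler" point can be made concrete by counting weights. This sketch assumes the textbook formulation, in which each gate or candidate block has one input-to-hidden matrix, one hidden-to-hidden matrix, and one bias vector. Framework implementations differ in details (PyTorch's `nn.LSTM` and `nn.GRU`, for example, keep separate input and hidden bias vectors), so their totals come out somewhat larger. The sizes 100 and 128 are arbitrary.

```python
def gated_rnn_params(input_size, hidden_size, n_blocks):
    """Count weights in a gated RNN cell with `n_blocks` gate/candidate blocks.

    Assumes each block has a (hidden x input) matrix, a (hidden x hidden)
    matrix, and a single bias vector of length hidden."""
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return n_blocks * per_block

def lstm_params(input_size, hidden_size):
    # Input, forget, and output gates plus the candidate cell state: 4 blocks.
    return gated_rnn_params(input_size, hidden_size, 4)

def gru_params(input_size, hidden_size):
    # Update and reset gates plus the candidate hidden state: 3 blocks.
    return gated_rnn_params(input_size, hidden_size, 3)

print(lstm_params(100, 128))  # 117248
print(gru_params(100, 128))   # 87936
```

Under this formulation a GRU always has three quarters of an LSTM's parameters, whatever the sizes. That is a fact about the cell, which is exactly why it tells you nothing about the problem you are modeling.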

Case Study: Bloomberg Boxes and Deep Learning Bros

The finance industry has a famous example of the "law of the instrument" cognitive bias. This is the traditional UI for a Bloomberg financial workstation (AKA, a Bloomberg Box).

If you are thinking, "It is hideous," many others agree. That is why, in 2007, Bloomberg contracted the design consultancy IDEO to do a complete redesign of this UI. This is what they came up with:

From IDEO's report:

IDEO created an interface that presents information in a logical progression, with news and data displayed left to right in order of increasing specificity. As users dig deeper, more cascading information panes are added to the right of the screen that can be moved using a touchpad.

Despite the objectively better layout from a celebrated design consultancy, financial analysts utterly rejected the design. The reason for the rejection is surprising.

The difficulty of using the old UI meant financial analysts had to learn how to navigate it. The bad UI created a barrier to entry that protected their jobs.

For these Bloomberg bros, the old UI also served as a status symbol: it communicated to others, "I know how to use this thing, which proves I am very smart."

DL bros are the new Bloomberg bros.

Despite their predictive achievements, deep learning architectures are brittle, hard to train, scale up, and maintain in production, and a huge source of technical debt.

Just as with the Bloomberg bros, this has led to a culture of deep learning bro-dom.

Deep learning bros are engineers whose value is their rare technical ability to build and maintain deep learning models. This technical skillset defines them as professionals. They have become something akin to platform engineers, people whose job it is to maintain the technology stack. They are not in the meetings that discuss business needs and strategy. They are too busy with smart deep learning stuff to worry about your MBA problems.

Being a DL bro, weighed down by the "law of the instrument" cognitive bias, is not a robust career strategy. If you Google around, you will read about waves of layoffs of machine learning engineers at well-known companies. It is folly to assume that demand for a specific set of technical skills in AI will persist without change.

A more robust strategy is to be plugged into the strategic discussion in your organization, and understand how to build machine learning products that meet those specific strategic needs.

Deep learning is still awesome.

This is not a repudiation of deep learning. Universal function approximation is epically useful. Every data scientist and machine learning engineer should study deep learning in-depth and experiment with its applications. This lecture is instead a repudiation of the deep learning bro culture that has grown around the complexity of getting deep learning to work. My message to you is that rejecting that culture in favor of a model-based machine learning approach will lead to a more fulfilling and ultimately less risky career trajectory.

Like what you read here? Share this article. Hate it? Share it anyway, so you can laugh with someone else about just how supremely clueless I am.
