How Stranger Things can make you smarter about OpenAI's GPT-3 language model

The Mind Flayer reveals what GPT-3 can and cannot do.

OpenAI’s GPT-3 continues to generate press as developers learn to build new apps on top of it.

I want to present a thought experiment that I hope helps characterize what exactly GPT-3 can and can’t do.

This thought experiment is a Stranger Things version of a thought experiment created by Emily Bender and Alexander Koller (starts at the 5:44 mark). The original version is probably more concise, but mine is cooler because I use 80’s pop culture.

Thought Experiment: Dustin, Suzie, and The Mind Flayer

Two teenagers named Dustin and Suzie are in love. They maintain a long-distance relationship over CB radio.

Dustin has an adversary, a lawful evil extra-dimensional octopus-headed being called a Mind Flayer. The Mind Flayer wishes to enter the human dimension and subjugate the humans, but Dustin and his allies have foiled its attempts.

The Mind Flayer needs to defeat Dustin and his allies. However, it knows nothing about our world except that it contains teenagers like Dustin and other types of humans. So, to gather enemy intel, the Mind Flayer eavesdrops on the CB radio communications between Dustin and Suzie.

After listening long enough, the hyper-intelligent Mind Flayer performs an extremely sophisticated statistical analysis of the numerous conversations between Dustin and Suzie. It uncovers statistical patterns in word usage far more nuanced and complex than any mere human algorithm could hope to capture.

Finally, the Mind Flayer uses its otherworldly abilities to block the transmissions between the couple. It then pretends to be Suzie in an effort to deceive Dustin.

The question is, can the Mind Flayer successfully deceive Dustin into thinking that it is Suzie? Whether or not the Mind Flayer can do this depends on what Dustin wants to discuss.

Construx, Autobots, and meaning

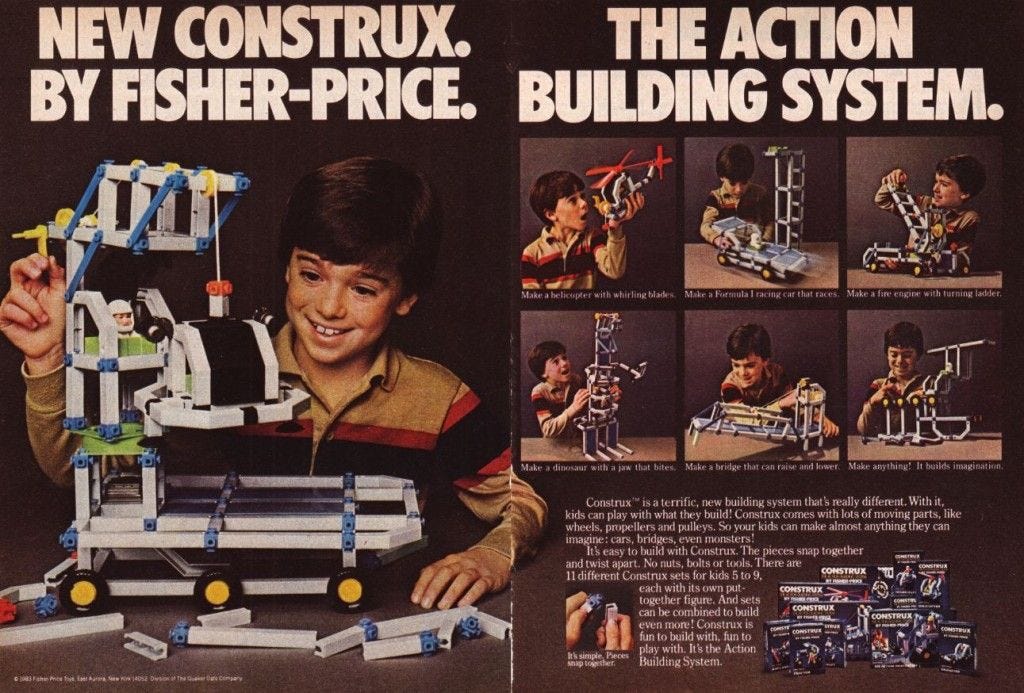

Previously the couple has had a lot of social conversations about their various STEM-related hobbies. So when Dustin says he made a robot from a kit, the Mind Flayer can say, “Wow, cool! You made an electronic man! You know what? I made a geodesic dome out of Construx!”

“Electronic man” is a good colloquial definition of “robot”. Talking of “geodesic domes” and “Construx” is very much on theme. The Mind Flayer’s analysis revealed a pattern among such words in previous conversations between the couple. It then used that pattern to generate this response. Dustin accepts the response as meaningful and doesn't suspect anything. The sophistication of the response and the successful deception of Dustin demonstrate the Mind Flayer's advanced statistical learning abilities.

Now, suppose that Dustin is excited because he has learned how to construct a DIY version of Optimus Prime that can transform automatically. He wants to explain to Suzie how she can make her own DIY Autobot using the materials she has on hand. He asks the Mind Flayer, who he believes is Suzie, for her thoughts.

Now, the Mind Flayer will have trouble coming up with a technically meaningful response. The Mind Flayer knows that words like “construx,” “kit,” “robot,” and many others have a subtle pattern of co-occurrence. However, these words cluster together because the couple is talking about "hobbies." To say actionable things about “hobbies,” the Mind Flayer would need to understand human psychology and how humans physically interact with their world.
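To make "pattern of co-occurrence" concrete, here is a minimal sketch of the crudest version of that analysis: counting which word pairs show up in the same message. The transcripts are invented for illustration, and the function name is my own. The Mind Flayer's statistics (and GPT-3's) are vastly more sophisticated, but the raw material is the same kind of thing.

```python
from collections import Counter
from itertools import combinations

# Hypothetical overheard messages, invented for this illustration.
TRANSCRIPTS = [
    "i built a robot from a kit",
    "i made a geodesic dome out of construx",
    "my robot kit came with construx parts",
    "the dome took all weekend",
]

def cooccurrence_counts(messages):
    """Count how often each unordered word pair appears in the same message."""
    counts = Counter()
    for message in messages:
        # Sort the unique words so every pair is stored in alphabetical order.
        words = sorted(set(message.split()))
        counts.update(combinations(words, 2))
    return counts

counts = cooccurrence_counts(TRANSCRIPTS)
print(counts[("kit", "robot")])      # pairs are keyed alphabetically
print(counts[("construx", "dome")])
```

Notice what the counts do not contain: any hint of what a kit is, what a robot does, or why a teenager would spend a weekend on either.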

Worse yet, the Mind Flayer’s dimension has eldritch physics that is fundamentally different from the physics of the humans’ dimensional plane. So the idea of constructing a toy may be utterly unfamiliar. Indeed, the idea of robots that transform shape (and why that’s cool) relies on the human world's physics and human culture to make any sense.

If it were truly Suzie, she would ask Dustin questions that gave her a high-level idea of how she would go about constructing the Autobot. The Mind Flayer, lacking the cultural and physical frame of reference, cannot articulate such questions.

So instead, the Mind Flayer will fall back on those subtle statistical conversational patterns it has learned. In the past, when either party said things like “I have an idea for a cool project, here is how to do it... What do you think?” the other party said something like “Oh my gosh, this is so cool! I can’t wait to try it out!” If this is what the Mind Flayer says back to Dustin, it's not inconceivable that Dustin will accept this as a meaningful response from Suzie. However, that is not because the Mind Flayer had some meaning it was trying to communicate; it couldn’t have. Rather, Dustin is projecting meaning onto the response, based on his beliefs and experiences with Suzie and other humans.
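Here is a toy sketch of that fallback, assuming the eavesdropper has stored a handful of (message, reply) pairs. The pairs, the word-overlap score, and the function names are all my own inventions for illustration; this is nothing like how GPT-3 actually works internally. It just shows how a plausible reply can come out of pure pattern matching.

```python
# Hypothetical overheard (message, reply) pairs, invented for illustration.
HISTORY = [
    ("i have an idea for a cool project what do you think",
     "Oh my gosh, this is so cool! I can't wait to try it out!"),
    ("i made a robot from a kit",
     "Wow, cool! You made an electronic man!"),
    ("good night suzie",
     "Good night!"),
]

def jaccard(a, b):
    """Word-set overlap between two strings, from 0.0 to 1.0."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)

def canned_reply(message, history=HISTORY):
    """Return the reply whose overheard message overlaps most with the new one."""
    _, best_reply = max(history, key=lambda pair: jaccard(message, pair[0]))
    return best_reply

reply = canned_reply(
    "here is a cool project for a transforming robot what do you think"
)
print(reply)
```

The reply sounds enthusiastic and on topic, yet nothing in the code knows what a transforming robot is. Any meaning in the exchange is supplied by the person reading the reply.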

Finally, let’s raise the stakes. Suppose Dustin asks the Mind Flayer (who is posing as Suzie) to help construct a novel device to hack the computer systems within a high-security Russian military facility. He needs this information immediately so he can help his friends. He’s getting some engineering fundamentals wrong and needs Suzie’s help.

Now the Mind Flayer is in trouble. As before, it lacks the human frames of reference needed to inject actionable meaning into its response to Dustin. Falling back onto a statistical answer like it did previously would lead to Dustin detecting the deception. There is no response the Mind Flayer could give based on statistical knowledge alone that the boy would find meaningful, given the stakes.

GPT-3 cannot understand. If it were more like the Mind Flayer, it could.

The thought experiment shows that algorithms like GPT-3 learn subtle statistical patterns driven by social and physical meaning. Those patterns allow them to generate grammatically perfect and coherent natural language. However, in general, they do not learn that meaning in a way that can be acted on when it matters.

To get past this limitation, the learning agent needs a way to map these statistical patterns back to meaning in the human world. One way to do this is by constraining the model with structure reflecting human-world entities and relationships, such as with graph neural networks.

Alternatively, the model could try to gain conceptual understanding directly from humans. The Mind Flayer learns from humans in Stranger Things. It possesses individual humans' minds, and in the process, it learns enough human psychology and social dynamics from its victims to successfully manipulate other humans.

To learn physics, the learning agent should interact with the physical world. The Mind Flayer does this through its Demogorgon henchmen and through its possession victims. Its Season 3 ability to engineer monsters out of raw flesh suggests it learned a thing or two about the physics of the human realm.

Planck’s Constant and Luck Dragons

The Season 3 finale scene between Dustin and Suzie played out differently than in my thought experiment. Dustin radios the (real) Suzie and asks her for Planck’s constant, which is the password to a door in a Russian military facility.

The scene that comes next is a nearly fatal overdose of nostalgia for 80’s kids like me. Click here to watch. Trigger warning: references to Luck Dragons.

Anyhow, GPT-3 probably could have answered that question, since the phrase “Planck’s constant” probably tends to co-occur with the actual value of Planck’s constant. That said, I couldn’t coax Hugging Face’s version of GPT-2 into giving me the value of Planck’s constant. GPT-2 is GPT-3’s predecessor. It uses essentially the same architecture but has far fewer parameters than GPT-3.

If I remember correctly, Planck’s constant is the ratio of a photon’s energy to its frequency, and its numerical value depends on the units you pick. Also, “the square root of the speed of light squared” is funny.
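For the record, the relation I was half-remembering is E = h × f, so h is a photon's energy divided by its frequency. Here is a small sketch showing how the number changes with the units. The two constants are the exact values fixed by the 2019 SI redefinition; the function names are my own.

```python
# Exact values fixed by the 2019 redefinition of the SI units.
PLANCK_J_S = 6.62607015e-34             # Planck's constant, in joule-seconds
JOULES_PER_EV = 1.602176634e-19         # one electronvolt, in joules

def planck_in_ev_s():
    """Planck's constant expressed in electronvolt-seconds."""
    return PLANCK_J_S / JOULES_PER_EV

def photon_energy_joules(frequency_hz):
    """Energy of a photon of the given frequency, from E = h * f."""
    return PLANCK_J_S * frequency_hz

h_ev = planck_in_ev_s()
print(f"h = {PLANCK_J_S:.8e} J*s")
print(f"h = {h_ev:.8e} eV*s")
```

Same constant, two very different-looking numbers, which is why "it depends on the units" is the honest answer to any password prompt that asks for it.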

Thanks for reading. Please help me find new subscribers by forwarding to friends and colleagues.