How to be smart about algorithmic bias

A primer. Also, prediction markets provide insight into impeachment hearings

AltDeep is a newsletter focused on microtrend-spotting in data and decision science, machine learning, and AI.

Know an engineer, research scientist, AI product manager, or entrepreneur in the AI space? Forward this to them. They’ll owe you one.

They say politics is like sports for nerds. Nothing drives that home more than the political bets people make in prediction markets, which provide unique insight into recent political events such as the ongoing impeachment hearings. See the section Data Sciencing for Fun and Profit below.

Ear to the ground - How to be smart about algorithmic bias: A primer

This week there was a social media storm about gender-biased credit offers from Apple Card. Based on early reports, the new credit card granted higher credit limits to men than to women.

Also last week, Chinese surveillance tech company Hikvision was caught explicitly marketing the ability to classify faces by ethnicity and race.

支持分析人员目标的性别属性(男、女),支持分析人员目标的种族属性(如维族、汉族)以及人种肤色属性(如白人、黄种人、黑人);支持分析人员是否戴眼镜、是否戴口罩、是否戴帽子、是否蓄胡子,识别准确率均不低于90%

Translation: Supports analysis of the target person's sex (male, female), ethnicity (such as Uyghur, Han), and race-based skin color (such as white, Asian, or black people); supports analysis of whether the target person wears glasses, a mask, or a hat, or has a beard, with an accuracy rate of no less than 90% for each.

Get ready for a perpetual debate about algorithmic bias.

The following is a short primer that will help you see why this problem is not going away, as well as how to be clever about understanding the nature of the beast.

Direct, indirect, and spurious discrimination

Discrimination occurs when individuals receive less favorable treatment based on a “protected” attribute such as race, religion, or gender. Which attributes are considered protected is a matter of society, law, and ethics. We can split discrimination into three classes: direct, indirect, and spurious.

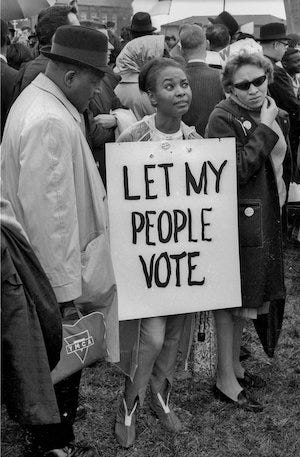

Direct discrimination occurs when individuals receive less favorable treatment explicitly on the basis of a protected attribute, such as restricting voting rights or paying people unequally on the basis of race or gender.

Indirect discrimination occurs when treatment is based on factors that are related to, but not the same as, a protected attribute. An example is the historical practice of redlining, where financial institutions denied services based on where people lived, and the areas that were denied services were correlated with concentrations of specific ethnic groups.

In some cases, the discriminator intentionally selects factors that let them discriminate on the basis of a protected attribute while preserving plausible deniability.
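To make the proxy mechanism concrete, here is a minimal Python sketch of a redlining-style rule. The applicant counts, the group labels `A` and `B`, the neighborhood names, and the function `redlining_rule` are all invented for illustration; the only point is that a rule which never reads the protected attribute can still produce very different outcomes by group when its input is correlated with that attribute.

```python
# Invented applicant pool: (group, neighborhood). Group A mostly lives in the
# north, group B mostly in the south.
applicants = (
    [("A", "north")] * 80 + [("A", "south")] * 20
    + [("B", "north")] * 20 + [("B", "south")] * 80
)


def redlining_rule(neighborhood: str) -> bool:
    """Approve based only on neighborhood; the group is never consulted."""
    return neighborhood == "north"


def approval_rate(group: str) -> float:
    decisions = [redlining_rule(hood) for g, hood in applicants if g == group]
    return sum(decisions) / len(decisions)


for group in ("A", "B"):
    print(group, approval_rate(group))
# A 0.8
# B 0.2
```

The rule never looks at the group, yet group B is approved a quarter as often as group A, because neighborhood stands in for group.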

Spurious discrimination is not discrimination, but rather a statistical bias that looks like discrimination. For example, a top university engineering program may accept far more men than women. Suppose the following conditions were true:

The admissions staff were not directly or indirectly rejecting applicants based on gender.

A STEM background was required to be accepted.

There were lower rates of female STEM participation in society.

This combination would lead to a statistical bias against women, but it would not constitute discrimination.
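The admissions scenario can be simulated in a few lines. This is a hypothetical sketch: the 1,000-applicant pools and the STEM shares (60% of men, 20% of women) are invented, and the admission rule is reduced to the STEM requirement alone.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Applicant:
    gender: str
    has_stem: bool


# Invented pool: 600 of 1,000 men and 200 of 1,000 women have a STEM background.
pool = (
    [Applicant("man", True)] * 600 + [Applicant("man", False)] * 400
    + [Applicant("woman", True)] * 200 + [Applicant("woman", False)] * 800
)


def admit(a: Applicant) -> bool:
    # Gender-blind rule: only the STEM requirement is checked.
    return a.has_stem


def rate(applicants: Iterable[Applicant]) -> float:
    """Share of the given applicants that the rule admits."""
    chosen = list(applicants)
    return sum(admit(a) for a in chosen) / len(chosen)


for g in ("man", "woman"):
    overall = rate(a for a in pool if a.gender == g)
    among_stem = rate(a for a in pool if a.gender == g and a.has_stem)
    print(f"{g}: overall {overall:.2f}, among STEM applicants {among_stem:.2f}")
# man: overall 0.60, among STEM applicants 1.00
# woman: overall 0.20, among STEM applicants 1.00
```

Men are admitted at 0.60 and women at 0.20, yet among applicants with a STEM background both groups are admitted at 1.00: in this toy setup the gap comes entirely from the applicant pool, not from the decision rule.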

Discrimination can become inadvertently automated

If human decision-makers engaged in discriminatory practices in some decision process, and a machine learning algorithm is used to automate that process (by training it on logged historical decisions), then the resulting algorithm will likely continue those discriminatory practices. Worse yet, it could amplify them by optimizing some objective function. Meanwhile, the humans who could have been held accountable for their discriminatory decisions have been removed from the picture.
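Here is a hypothetical Python sketch of that failure mode. The decision log, the neighborhoods, and the helper `fit_neighborhood_model` are invented; the "model" is simply a per-neighborhood approval rate learned from past human decisions, standing in for a real machine learning model. The historical reviewers approved purely by group (an extreme case, chosen for clarity), and the engineer drops the group column before training.

```python
from collections import defaultdict

# Invented decision log: (group, neighborhood, human_approved).
# Reviewers approved every group A applicant and no group B applicant.
log = (
    [("A", "north", True)] * 80 + [("A", "south", True)] * 20
    + [("B", "north", False)] * 20 + [("B", "south", False)] * 80
)


def fit_neighborhood_model(records):
    """'Train' by memorizing the historical approval rate per neighborhood.

    The group column is ignored, as a well-meaning engineer might do.
    """
    counts = defaultdict(lambda: [0, 0])  # neighborhood -> [approved, total]
    for _group, hood, approved in records:
        counts[hood][0] += approved
        counts[hood][1] += 1
    rates = {hood: ok / total for hood, (ok, total) in counts.items()}
    # Approve whenever most past applicants from that neighborhood were approved.
    return lambda hood: rates[hood] >= 0.5


model = fit_neighborhood_model(log)

for group in ("A", "B"):
    hoods = [hood for g, hood, _ in log if g == group]
    print(group, sum(model(h) for h in hoods) / len(hoods))
# A 0.8
# B 0.2
```

In this toy example, removing the protected attribute from the training data did not remove the discrimination: direct discrimination by the human reviewers became indirect discrimination through a proxy, with no person left to hold accountable.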

It is difficult to distinguish spurious discrimination from actual discrimination (even when the algorithm is not a black box)

Hikvision’s explicit advertisement of race and ethnicity-based classification is an example of an algorithm intended to be used for direct discrimination (unless China merely wants to use this tech to conduct a population census, which is doubtful). But it is possible Apple’s credit card offers were an example of spurious discrimination.

Both actual and spurious discrimination show up as statistical bias, so observing a statistical bias in an algorithm’s outputs is not, by itself, sufficient evidence of discrimination.
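A small Python sketch shows why an audit of outputs cannot tell the two cases apart. Both "worlds" below are invented: in one, admissions follow a gender-blind STEM requirement; in the other, reviewers ignore STEM and admit a fixed share of each gender. The helper names `build_world` and `audit` are hypothetical.

```python
from typing import Callable

Row = tuple[str, bool, bool]  # (gender, has_stem, admitted)

# Invented STEM background counts per 1,000 applicants of each gender.
STEM = {"man": 600, "woman": 200}


def build_world(decide: Callable[[str, bool, int], bool]) -> list[Row]:
    """Generate 1,000 applicants per gender and apply the given decision rule."""
    rows = []
    for gender, n_stem in STEM.items():
        for i in range(1000):
            has_stem = i < n_stem
            rows.append((gender, has_stem, decide(gender, has_stem, i)))
    return rows


# Spurious world: gender-blind rule, admit exactly the STEM applicants.
spurious = build_world(lambda g, stem, i: stem)

# Direct world: reviewers ignore STEM and admit 3 in 5 men but 1 in 5 women.
direct = build_world(lambda g, stem, i: i % 5 < (3 if g == "man" else 1))


def audit(rows: list[Row]) -> dict[str, float]:
    """What an outside auditor sees: admission rate by gender, outputs only."""
    out = {}
    for g in ("man", "woman"):
        decisions = [admitted for gender, _, admitted in rows if gender == g]
        out[g] = sum(decisions) / len(decisions)
    return out


print(audit(spurious))  # {'man': 0.6, 'woman': 0.2}
print(audit(direct))    # {'man': 0.6, 'woman': 0.2}
```

The two mechanisms are genuinely different (the rows themselves differ), but an auditor who only sees gender and outcome gets identical numbers from both.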

To tell the two apart, you need a causal model. A causal model could distinguish between actual and spurious discrimination by answering counterfactual questions like, “The credit offer algorithm gave her a credit limit of $5000; what would it have given her if she were a man?” A causal model can also reason about indirect discrimination using mediation analysis.
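As an illustration of that counterfactual question, here is a toy linear structural causal model in Python. Everything in it is an assumption made up for this sketch: the two equations, their coefficients, and the applicant's numbers. It follows the standard abduction, action, prediction recipe for counterfactuals and splits the effect of gender into a direct part and a part mediated by income. It is not the causal explanation formula from the Zhang and Bareinboim paper in the Resources, only the simplest case of the same idea.

```python
# Hypothetical linear structural causal model (all coefficients invented):
#   income = 40_000 + 10_000 * male + u_income
#   limit  = 0.1 * income + 2_000 * male + u_limit
# Gender affects the limit directly (2_000) and indirectly through income.


def income_eq(male: int, u_income: float) -> float:
    return 40_000 + 10_000 * male + u_income


def limit_eq(income: float, male: int, u_limit: float) -> float:
    return 0.1 * income + 2_000 * male + u_limit


# Observed applicant: a woman with income 45,000 who got a 5,000 limit.
male, income, limit = 0, 45_000, 5_000

# Abduction: recover this person's exogenous noise terms from the observation.
u_income = income - income_eq(male, 0)
u_limit = limit - limit_eq(income, male, 0)

# Action + prediction: set male = 1 while keeping her noise terms fixed.
cf_income = income_eq(1, u_income)
cf_total = limit_eq(cf_income, 1, u_limit)  # change also flows through income
cf_direct = limit_eq(income, 1, u_limit)    # income held at its factual value

print(f"counterfactual limit: {cf_total:.0f}")                   # 8000
print(f"direct effect: {cf_direct - limit:.0f}")                 # 2000
print(f"indirect effect via income: {cf_total - cf_direct:.0f}")  # 1000
```

In this made-up model she would have received 8,000 instead of 5,000: 2,000 from the direct use of gender and 1,000 from the income gap that gender induces. In practice, these equations are exactly what is hard to know.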

However, causal models are problematic because we often don’t know the causal structure of the phenomena that create the data fed to the decision-making algorithm. You can make assumptions about that structure, but it might be difficult or impossible to validate those assumptions empirically. Some research tries to address this by estimating bounds on the amount of discrimination in the decision-making system.

People think discrimination == malicious intent. It isn’t.

Discrimination naturally appeals to an underdog narrative where some malicious actor actively seeks to oppress some group. The problem with this narrative is that people tend to treat the absence of evidence of malicious intent as evidence that there is no discrimination.

This logical fallacy is at the heart of the “machines can’t discriminate” argument: an algorithm is not capable of hate, so it can’t possibly hate black people, so it can’t want to discriminate against black people, so it can’t possibly discriminate against black people. Therefore, if the algorithm is statistically biased against black people, either it is spurious discrimination or deep learning has magically constructed some truth about black people that our PC culture finds unpalatable. The fallacy here is that malicious intent to harm people with a protected attribute is not a necessary condition for discrimination.

You can easily imagine a phenomenon where most people grew up with a culturally defined Platonic ideal of a “mother” that looks like the mother in the old Carnation Milk advertisements (call her Carnation mom).

Those folks then go and use the Internet, building Web links between the words “mother” and “mom” and images that look like Carnation mom. So when one searches the word “mother” in an image search engine, the algorithm finds a stronger statistical association between the search term and images like Carnation mom than images of, for example, a working mother of color.

Implicitly priming image search with a preference for the Carnation mom is an example of direct discrimination encoded into an algorithm. However, the Internet users who were individually building up that statistical preference for the Carnation Milk mother weren’t actively thinking, “I’m clicking this link because I want to attack working mothers of color by making them underrepresented in image search results 😡!” Discrimination sans malicious intent.
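A toy Python sketch of how this can happen: the link log, the image IDs, and the `search` function are hypothetical, and ranking by raw link counts is a deliberate simplification of a real image search engine. No single link is malicious, but ranking by association turns a 5% minority of links into 0% of the top results.

```python
from collections import Counter

# Invented link log: (anchor_text, image_id) pairs created independently by many users.
links = (
    [("mother", "carnation_style_1")] * 40
    + [("mother", "carnation_style_2")] * 35
    + [("mother", "carnation_style_3")] * 20
    + [("mother", "working_mom_of_color")] * 5
)


def search(query: str, k: int = 3) -> list[str]:
    """Rank images by how many user-created links tie them to the query term."""
    counts = Counter(image for term, image in links if term == query)
    return [image for image, _ in counts.most_common(k)]


print(search("mother"))
# ['carnation_style_1', 'carnation_style_2', 'carnation_style_3']
```

The working mother of color only appears if the user asks for a fourth result, even though nobody who created a link intended to exclude her.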

Bottom line: this problem is hard to understand, much less solve

Think about this:

A major tech company can produce an algorithm that doesn’t discriminate in controlled settings but reproduces statistical biases rooted in deeper problems in society when people use the app in uncontrolled settings.

Bad things will happen even when everyone has good intentions. Absence of bad intentions is not evidence that nothing bad is happening.

It is not generally possible to tell using statistical evidence alone if an algorithm is discriminating directly, indirectly, or just reflecting the underlying statistical biases in the processes that generated the data.

Resources

Zhang, J., & Bareinboim, E. (2018, April). Fairness in decision-making—the causal explanation formula. In Thirty-Second AAAI Conference on Artificial Intelligence.

Hikvision product page via Wayback Machine

Data Sciencing for Fun and Profit

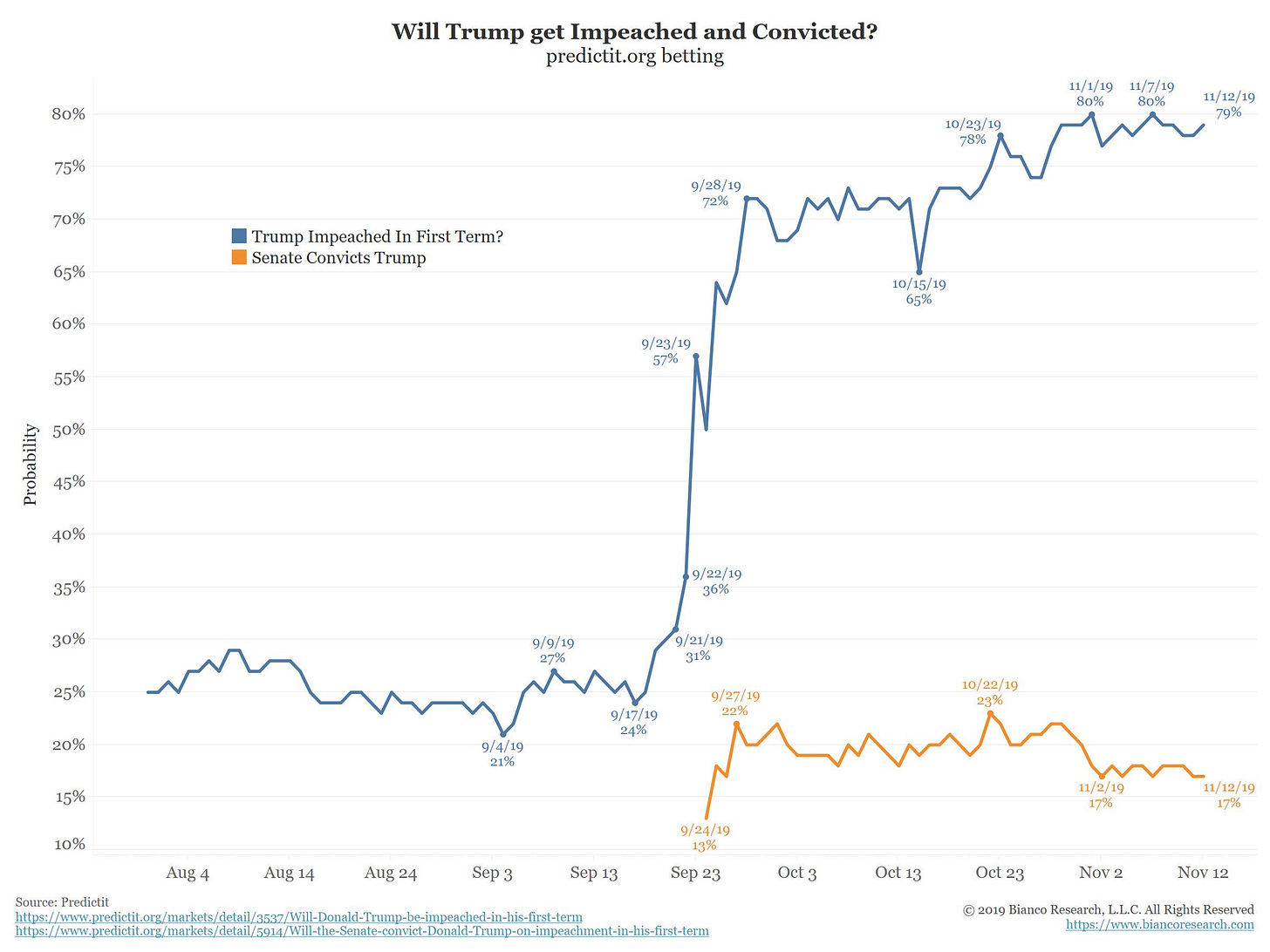

Prediction markets provide insight into impeachment hearings

The odds for impeachment have been steadily increasing since Nancy Pelosi announced the inquiry. The odds that the Senate would convict upon impeachment (resulting in removal from office) remain long.

This initial week of hearings was probably disappointing if you were betting in these markets. There was no new information, and there were no shocker soundbites to suddenly add the volatility that seasoned bettors profit from.