Quantum Bayesianism

Another nail in scientific objectivism's coffin?

Announcement

This week I’m opening up a new course at Altdeep called How to Evolve Beyond Glorified Curve-Fitter. Here is the pitch.

The machine learning community is obsessed with hacks and tricks to get deep learning to work, and is mired in magical thinking about what deep learning can do. This course helps MLers escape that mental bog with a mental refactor, using ideas from Bayesianism, Shannon’s information and communication theory, decision theory, and an examination of inductive biases in deep learning.

Enrollment opens tomorrow. Sign up for the enrollment notification email, or ping me on Twitter.

Ear to the Ground

Quick bites

The Cost of AI Training is Improving at 50x the Speed of Moore’s Law: Why It’s Still Early Days for AI — Ark Invest

Some perspective on the high absolute cost of training deep learning models, showing that the marginal costs are falling.

Understanding Bayes: How to Become a Bayesian in Eight Easy Steps — The Etz-Files

A reading list.

The Value of Thinking about Varying Treatment Effects — Andrew Gelman’s Blog

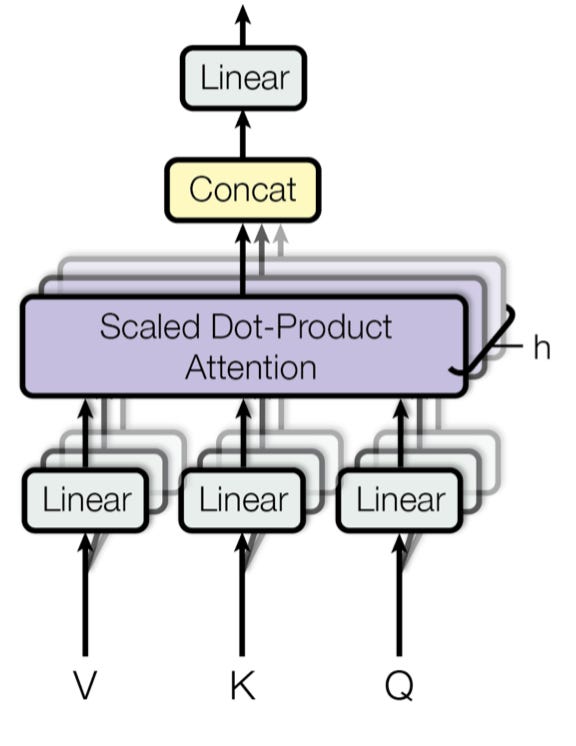

A statistician’s insiders view for how drug trials get planned, and thoughts about how the different ways a drug can affect different subpopulations while having the same average effect on the population.Paperswithcode.com has a new feature that allows you to explore papers by modeling method, and each modeling method has a fantastic graphic, like this one.

This is super cool.
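Gelman’s point about varying treatment effects shows up in a toy calculation. The sketch below uses made-up subgroup shares and effects; nothing here comes from his post. In one hypothetical population every patient gets the same benefit. In the other, half the patients get twice that benefit and the other half get none. Both produce the same average treatment effect.

```python
def average_treatment_effect(groups):
    """Share-weighted mean of per-subgroup effects.

    groups: list of (population_share, effect_in_subgroup) pairs; shares must sum to 1.
    """
    total_share = sum(share for share, _ in groups)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(share * effect for share, effect in groups)


# Hypothetical numbers, chosen only for illustration
uniform = [(0.5, 2.0), (0.5, 2.0)]  # every patient improves by 2 units
split = [(0.5, 4.0), (0.5, 0.0)]    # half improve by 4 units, half not at all

print(average_treatment_effect(uniform))  # 2.0
print(average_treatment_effect(split))    # 2.0
```

The average alone cannot tell these two populations apart. This is why a trial planned only around the average effect can hide who the drug actually helps.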

Quantum Bayesianism suggests a lack of objective truth even at the quantum level.

Quantum theorist Christopher Fuchs explains his brainchild, quantum Bayesianism.

Once upon a time there was a wave function, which was said to completely describe the state of a physical system out in the world. The shape of the wave function encodes the probabilities for the outcomes of any measurements an observer might perform on it, but the wave function belonged to nature itself, an objective description of an objective reality.

Then Fuchs came along. Along with the researchers Carlton Caves and Rüdiger Schack, he interpreted the wave function’s probabilities as Bayesian probabilities — that is, as subjective degrees of belief about the system. Bayesian probabilities could be thought of as gambling attitudes for placing bets on measurement outcomes, attitudes that are updated as new data come to light. In other words, Fuchs argued, the wave function does not describe the world — it describes the observer. “Quantum mechanics,” he says, “is a law of thought.”
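Here is a concrete version of “gambling attitudes … updated as new data come to light.” It is a minimal sketch and not Fuchs’s own formalism. An agent is unsure which of two hypothetical qubit preparations it faces. It treats Born-rule probabilities as likelihoods and applies Bayes’ rule after each measurement outcome. It ends with a probability it could use as the fair price of a bet on the next outcome. The states `PLUS` and `TILTED` and the outcome record are invented for illustration. In QBism proper, the quantum state itself is the agent’s belief; this sketch only shows the ordinary Bayesian updating step.

```python
import numpy as np


def born_probs(state):
    # Born rule: probability of each computational-basis outcome is |amplitude|^2
    state = np.asarray(state, dtype=complex)
    state = state / np.linalg.norm(state)
    return np.abs(state) ** 2


# Two hypothetical preparations the agent is unsure between
PLUS = np.array([1.0, 1.0]) / np.sqrt(2)                   # 50/50 over outcomes 0 and 1
TILTED = np.array([np.cos(np.pi / 8), np.sin(np.pi / 8)])  # favors outcome 0 (~85%)
HYPOTHESES = [PLUS, TILTED]


def bayes_update(belief, outcome):
    # Bayes' rule over the hypotheses, with Born-rule likelihoods for the observed outcome
    likelihood = np.array([born_probs(h)[outcome] for h in HYPOTHESES])
    posterior = belief * likelihood
    return posterior / posterior.sum()


belief = np.array([0.5, 0.5])    # initial gambling attitude: no preference
for outcome in [0, 0, 1, 0, 0]:  # hypothetical measurement record
    belief = bayes_update(belief, outcome)

# Probability of outcome 0 on the next run = fair price of a $1 bet on it
p_next_zero = float(belief @ np.array([born_probs(h)[0] for h in HYPOTHESES]))
print(belief, p_next_zero)
```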

The article lays out some of the paradoxes of quantum theory and how the quantum Bayesian perspective resolves them: wave functions are subjective, and objective reality is an illusion.

A Private View of Quantum Reality — Quanta Magazine

If you can’t look to statistical inference for truth, why should we trust scientists?

Putting another nail in objectivism’s coffin, Cassie Kozyrkov, in a recent article, does a great job articulating why statistical inference can’t give you objective truth. The gist is that all statistical models rely on assumptions, and assumptions are subjective.

“That’s why I love deep learning! It’s assumption-free.” No, it is not; it has inductive biases.

In a follow-up article, Kozyrkov discusses the implications for understanding science and for deciding whether to believe scientists.

The saddest equation in data science — Towards Data Science

Why do we trust scientists? — Cassie Kozyrkov
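Kozyrkov’s point, that conclusions inherit the analyst’s assumptions, can be sketched with a conjugate Beta-Binomial model. The numbers below are hypothetical and do not come from her articles. Two analysts see the same data, 7 successes in 10 trials. Because they start from different priors, they report different estimates of the success rate.

```python
from fractions import Fraction


def beta_binomial_update(prior_a, prior_b, successes, trials):
    # Conjugate update: Beta(a, b) prior + binomial data -> Beta(a + k, b + n - k) posterior
    return prior_a + successes, prior_b + (trials - successes)


def beta_mean(a, b):
    # Mean of a Beta(a, b) distribution, kept as an exact fraction
    return Fraction(a, a + b)


successes, trials = 7, 10  # hypothetical shared data

flat_posterior = beta_binomial_update(1, 1, successes, trials)       # analyst A: flat prior
skeptic_posterior = beta_binomial_update(20, 20, successes, trials)  # analyst B: strong prior near 0.5

print(beta_mean(*flat_posterior))     # 2/3
print(beta_mean(*skeptic_posterior))  # 27/50
```

Neither answer is wrong given its prior. The gap between them comes entirely from the assumption each analyst brought to the data.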


AI Startups for the Rest of Us

Husband and wife say running an ML SaaS app is hard.

Reddit user pp314159 discusses the ML SaaS app he runs with his wife.

Running solo is hard. I'm running SaaS (https://mljar.com) where you can upload CSV data and tune ML models with AutoML. I'm running it with my wife. From what I observe, many more people are searching for ML jobs and tutorials than ML software. In fact, to make (large) money on ML service you will need to sell ML software/service to the enterprises (on-premise versions). Running a company that produces software and sells it to the enterprise is hard. Running it as a solo - very hard.

I would suggest that a better model is an ordinary SaaS product that delivers more value to the customer as data comes in, rather than selling ML as a service.

This NLP app is a bit flat.

I tried out an app called Fluently, which promises to “let you write fluently in any language.”

Grammarly proved to me that you can do great work by applying NLP to a writing task.

That said, it is not clear to me what value this adds on top of Google Translate. It also has a dictionary and a thesaurus, but those tools are also just a search engine query away. Perhaps I’m missing something.

Summer of Deep Generative Models

Inverse graphics with convolutional neural network-based inference mimics biological visual perception mechanisms.

This week I read a paper in which the authors try to answer a question: how can our visual experiences be so rich in content and so robust to environmental variation while being computed so quickly?

The paper evaluates a model of visual perception called “analysis-by-synthesis,” also known as “inverse graphics.” Inverse graphics is based on the theory that the brain not only recognizes and localizes objects but also infers the underlying causal structure of scenes. The brain also picks up many fine-grained details. For example, when we see a face, we recognize the person if they are familiar, and we perceive subtleties of expression even in strangers.

The problem is that computational models that take the inverse graphics approach usually rely on Bayesian inference algorithms. These algorithms tend to be slow, much slower than the nearly instantaneous visual perception of the human brain.

This paper replaces traditional Bayesian inference algorithms with the deep convolutional neural networks popular in the deep learning research community. Their use here differs from the conventional discriminative training setting of conv nets. The authors instead use the network as the inference component of a generative model. See this primer on discriminative vs. generative models.

The paper presents a specific instance of this modeling approach and compares it to biological visual processing mechanisms.

Yildirim, I., Belledonne, M., Freiwald, W., & Tenenbaum, J. (2020). Efficient inverse graphics in biological face processing. Science Advances, 6(10), eaax5979.
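The inverse-graphics trade-off described above, slow Bayesian inference versus fast learned inference, can be sketched on a toy problem. Everything here is a hypothetical stand-in, not the model from Yildirim et al.:

- `render` is a tiny "graphics engine" that maps a latent pose angle to a 2-D observation.
- The slow path is analysis-by-synthesis. It renders every candidate latent on a grid and weights each one by its likelihood.
- The fast path is amortized inference. A regressor is trained on samples drawn from the generative model and then maps an observation straight to a latent estimate.
- A least-squares fit on hand-picked features stands in for the CNN.

```python
import numpy as np

rng = np.random.default_rng(0)
NOISE = 0.05  # observation noise standard deviation (assumed known)


def render(z):
    # Toy "graphics engine": latent pose angle z (radians) -> 2-D observation
    z = np.asarray(z, dtype=float)
    return np.stack([np.cos(z), np.sin(z)], axis=-1)


def sample_scenes(n):
    # Draw latents from a uniform prior on [-1, 1] and render noisy observations
    z = rng.uniform(-1.0, 1.0, size=n)
    x = render(z) + rng.normal(0.0, NOISE, size=(n, 2))
    return z, x


def grid_posterior_mean(x):
    # Slow analysis-by-synthesis: render every candidate latent, score it against x
    # with a Gaussian likelihood, and return the posterior mean under the uniform prior
    grid = np.linspace(-1.0, 1.0, 2001)
    log_lik = -((render(grid) - x) ** 2).sum(axis=1) / (2 * NOISE**2)
    weights = np.exp(log_lik - log_lik.max())  # subtract max for numerical stability
    return float((weights * grid).sum() / weights.sum())


def features(x):
    # Hand-picked features; the linear model on top stands in for a CNN
    x = np.atleast_2d(x)
    return np.column_stack(
        [np.ones(len(x)), x[:, 0], x[:, 1], x[:, 0] * x[:, 1], x[:, 1] ** 3]
    )


def fit_amortized_inference(n_train=20_000):
    # Train an observation -> latent regressor on samples from the generative model
    z, x = sample_scenes(n_train)
    coef, *_ = np.linalg.lstsq(features(x), z, rcond=None)
    return lambda x_new: features(x_new) @ coef


infer_fast = fit_amortized_inference()
z_test, x_test = sample_scenes(200)
fast = infer_fast(x_test)
slow = np.array([grid_posterior_mean(x) for x in x_test])
fast_mae = np.abs(fast - z_test).mean()
slow_mae = np.abs(slow - z_test).mean()
print(f"amortized MAE: {fast_mae:.3f}, grid MAE: {slow_mae:.3f}")
```

The grid method re-renders 2,001 candidates for every observation. The fitted regressor answers each query with a single dot product. That speed gap, paid for with up-front training on synthetic samples, is the motivation for swapping the inference algorithm for a network.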

Signals from China

TikTok seems to have race-based facial recognition for content moderation in production in China.