To be a better modeler, stop thinking in black-and-white

Plus, deep abstract concept learning

AltDeep is a newsletter focused on microtrend-spotting in data and decision science, machine learning, and AI. It is authored by Robert Osazuwa Ness, a Ph.D. machine learning engineer at an AI startup and adjunct professor at Northeastern University.

Last week…

I decided to make this newsletter a bit more accessible to people at the earlier stages of their journey in AI and data science, so I’m reshuffling topics a bit. I’m creating “Trending in AI,” which features research overviews. I’m also creating “Tricks of the Trade,” which focuses on how to improve one’s skill set. “Ear to the Ground” will feature non-researchy trends.

Ear to the Ground

Curated posts aimed at spotting microtrends in data science, machine learning, and AI.

Prominent YouTuber on AI topics exposed for content theft

Siraj Raval is a prominent YouTuber who posts videos on AI topics. This is a bit gossipy, but the thread is an interesting read. It reveals a lot about the broader phenomenon of people who want to learn data science and AI, and who pay money to do so.

Udacity had an interventional meeting with Siraj Raval on content theft for his AI course — /r/machinelearning

Trending in AI

High-level trends from the cutting edge of AI research

An IQ test for deep learning

In this newsletter I’ve been paying attention to work from the deep learning research community on incorporating inductive biases, abstraction, and hierarchy into deep neural network architectures and training methods. Along these lines, this work by DeepMind came out last year and was recently revisited by AI blogger Jesus Rodriguez. I decided to add my own overview in this issue: Rodriguez’s post goes deep into the implementation, while I’m far more interested in how DeepMind frames the problem of abstract concept learning.

Humans use abstract concepts learned from everyday experience to interpret simple visual scenes. For example, by watching plants grow, they come to understand the abstract concept of “progression”.

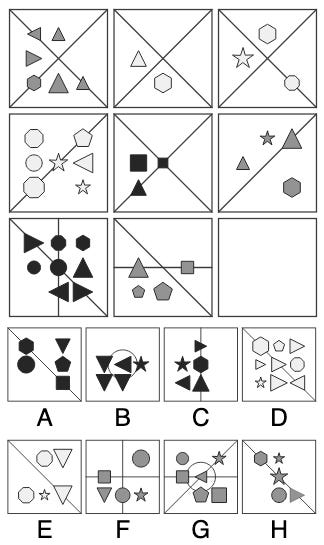

DeepMind built a generator for creating classic IQ test problems called matrix problems. In a matrix problem, the test-taker must select, from eight choices, the panel that correctly fills the missing bottom-right cell.

Matrix questions involve a set of abstract object attributes (shape, shape count, size, color, lines) and relations that connect these attributes across panels. In the example above, for instance, the “progression” relation shows up in the columns: as you move down a column, the number of objects increases by one. Other explicitly defined relations hold across the rows and columns, and only one of the eight choices is consistent with all of them.
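To make that structure concrete, here is a toy sketch (not DeepMind’s generator or data format) in which each panel is reduced to a dict of attribute values, and a candidate answer counts as correct only if every relation still holds once it fills the missing cell. The attribute names, the two relations, and the 3x3 layout are illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple

Panel = Dict[str, int]  # attribute name -> value, e.g. {"count": 2, "size": 1}
Rule = Tuple[str, str, Callable[[List[int]], bool]]  # (axis, attribute, relation)


def is_progression(values: List[int]) -> bool:
    """True if the values increase by exactly one at each step."""
    return all(b - a == 1 for a, b in zip(values, values[1:]))


def is_constant(values: List[int]) -> bool:
    """True if every value is the same."""
    return len(set(values)) == 1


def consistent(grid: List[List[Panel]], candidate: Panel, rules: List[Rule]) -> bool:
    """Place the candidate in the missing bottom-right cell and check every rule."""
    filled = [row[:] for row in grid]  # copy so the original grid is untouched
    filled[2].append(candidate)        # the bottom row starts with two panels
    for axis, attr, relation in rules:
        # Rows are read left to right; columns are read top to bottom.
        lines = filled if axis == "row" else [list(col) for col in zip(*filled)]
        if not all(relation([panel[attr] for panel in line]) for line in lines):
            return False
    return True


# Toy question: counts progress down each column, size is constant along each row.
grid = [
    [{"count": 1, "size": 0}, {"count": 2, "size": 0}, {"count": 3, "size": 0}],
    [{"count": 2, "size": 1}, {"count": 3, "size": 1}, {"count": 4, "size": 1}],
    [{"count": 3, "size": 2}, {"count": 4, "size": 2}],
]
rules = [("col", "count", is_progression), ("row", "size", is_constant)]
candidates = [{"count": 5, "size": 2}, {"count": 4, "size": 2}, {"count": 5, "size": 1}]
answers = [i for i, c in enumerate(candidates) if consistent(grid, c, rules)]
print(answers)  # [0]
```

Only the first candidate satisfies both relations: the second breaks the column progression, and the third breaks the constant size along the bottom row.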

The generator can take this small set of underlying factors and relations and generate a large number of unique questions. The team constrained the combinations of factors and relations so that they differed between the training and test sets, to measure how well their models could generalize to held-out data. For example, the “progression” relation might appear in the training set only for size (e.g. small, medium, big) and counts (e.g. 2, 3, 4), while the test set has “progression” for color (light grey, dark grey, black). If a model performs well on this test set, that would be evidence of an ability to infer and apply the abstract notion of progression, even to attributes for which it had never previously seen a progression.
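The held-out idea amounts to splitting over (relation, attribute) pairs rather than over individual questions. The sketch below is a toy illustration with assumed names, not the paper’s actual generalization regimes: the pair (“progression”, “color”) is reserved for testing, so a model never sees progression applied to color during training, while it does see progression on size and count.

```python
import itertools
import random

RELATIONS = ["progression", "constant"]
ATTRIBUTES = ["size", "count", "color"]
HELD_OUT = {("progression", "color")}  # combinations reserved for the test set


def split_pairs():
    """Partition every (relation, attribute) pair into train and test pools."""
    pairs = list(itertools.product(RELATIONS, ATTRIBUTES))
    train = [p for p in pairs if p not in HELD_OUT]
    test = [p for p in pairs if p in HELD_OUT]
    return train, test


def sample_questions(pool, n, seed=0):
    """Draw n question specs, each reduced to the abstract rule it tests.

    A real generator would also sample attribute values and render panels.
    """
    rng = random.Random(seed)
    return [rng.choice(pool) for _ in range(n)]


train_pool, test_pool = split_pairs()
train_qs = sample_questions(train_pool, 1000, seed=1)
test_qs = sample_questions(test_pool, 200, seed=2)
```

Because the split happens at the level of rules, good test accuracy cannot come from memorizing question templates; the model has to transfer “progression” to an attribute it never saw progress.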

The team came up with a new network architecture called the Wild Relation Network (WReN) that is more adept than standard models at answering these complex visual reasoning questions. However, all the models performed poorly precisely when a relation seen in the training data was applied to new factors in the test set. This is not a shocker; we already knew that deep learning is generally not good at extrapolating to new situations the way humans do by reasoning with abstract concepts.

The interesting result is that performance on this task improved when the model was trained to predict not only the correct answer, but also the ‘reasons’ for the correct answer. In other words, given a question as input, the output label is not just the right answer but also the factors and relations a human test-taker would need to identify to get the right answer.
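Here is a minimal sketch of what such a training signal could look like, assuming the ‘reasons’ are encoded as a binary vector (one bit per factor or relation present in the question) and added to the usual answer loss with a weighting term `beta`. The encoding, function names, and `beta` here are illustrative assumptions rather than the paper’s exact setup.

```python
import math
from typing import List


def softmax_cross_entropy(logits: List[float], target: int) -> float:
    """Loss for choosing the correct panel among the candidate answers."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def binary_cross_entropy(logits: List[float], bits: List[int]) -> float:
    """Mean loss for predicting which factors/relations ('reasons') are present."""
    total = 0.0
    for z, y in zip(logits, bits):
        # Numerically stable form of -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))].
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(bits)


def combined_loss(answer_logits: List[float], answer: int,
                  reason_logits: List[float], reason_bits: List[int],
                  beta: float = 1.0) -> float:
    """Answer loss plus a beta-weighted auxiliary loss on the reasons."""
    return (softmax_cross_entropy(answer_logits, answer)
            + beta * binary_cross_entropy(reason_logits, reason_bits))
```

Setting `beta = 0` recovers ordinary answer-only training; a positive `beta` forces the network’s internal representation to also carry the abstract labels.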

The take-away is that one way to get deep nets to reason with abstract concepts is to explicitly tell them, during training, which abstract concepts they should be predicting with.

This works best in settings where the abstract concepts can be clearly defined and separated from one another. That said, I expect human abstract thinking is more context-dependent, nebulous, and hierarchical than that. Still, this is cool stuff.

Barrett, David GT, et al. "Measuring abstract reasoning in neural networks." arXiv preprint arXiv:1807.04225 (2018). (Link is to the DeepMind blog summary.)

Are Neural Networks Capable of Abstract Reasoning? Let’s Use an IQ Test to Prove It — Jesus Rodriguez, Towards Data Science

Tricks of the Trade

Write-ups and curated links on perfecting the craft of data science.

What Kolmogorov complexity says about machine learning

This excellent post explains the trade-off between computation and memory in machine learning. If, like me, you approach statistics and machine learning by representing the data-generating process as a program, you’ll likely be bookmarking this post.
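For readers new to the term: the Kolmogorov complexity of a piece of data is the length of the shortest program that outputs it. It is not computable, but compressed size is a common, rough upper bound on it. This small sketch (my own illustration, not taken from the linked post) compares bytes produced by a one-line rule with pseudo-random bytes; only the former compress well, because a short description of them exists and the compressor can find it.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length of the zlib-compressed data: a crude upper bound on its information content."""
    return len(zlib.compress(data, 9))


n = 10_000
# Data produced by a tiny program: the byte values 0..255, repeated.
regular = bytes(i % 256 for i in range(n))
# Pseudo-random bytes, seeded so the example is reproducible.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(n))

print(compressed_size(regular), compressed_size(noisy))
```

Note that the seeded “random” bytes also have a short generating program (the seed plus the generator), which zlib cannot discover. Compression only ever gives an upper bound.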

Machine Learning, Kolmogorov Complexity, and Squishy Bunnies — The Orange Duck

“Dichotomania”: how your black-and-white view of the world might lead to bad modeling

Bayesian statistician and prolific blogger Andrew Gelman recently reiterated his view that a “statistical thinker” thinks more continuously than the hoi polloi, who think in discrete terms. Another eminent statistician, Sander Greenland, called this manner of discrete thinking “dichotomania”:

dichotomania [is] the compulsion to perceive quantities as dichotomous even when dichotomization is unnecessary and misleading…

In other words, dichotomania is the tendency for humans to see problems as black and white rather than on a spectrum. Gelman argues that due to this bias, data scientists prematurely discretize variables when modeling a problem.

For example, suppose you want to predict whether a sports team will win. Rather than model the probability that the team will win, you should model the number of points each team scores and derive the predicted winner from the predicted scores.
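A minimal sketch of the score-first approach, assuming each team’s score is an independent Poisson variable with a known scoring rate (a common textbook choice for low-scoring sports like soccer; a real model would estimate the rates from data). The win probability is derived from the score distributions rather than modeled directly, and the same model answers questions a binary win/lose model cannot, such as the chance of a draw or the expected margin. The rates below are hypothetical.

```python
import math


def poisson_pmf(k: int, rate: float) -> float:
    """Probability of scoring exactly k points under a Poisson(rate) model."""
    return math.exp(-rate) * rate**k / math.factorial(k)


def outcome_probs(rate_a: float, rate_b: float, max_points: int = 60):
    """Derive (win, draw, loss) probabilities for team A from per-team score models.

    Scores are truncated at max_points; for rates of a few points per game
    the probability mass beyond that is negligible.
    """
    pmf_a = [poisson_pmf(k, rate_a) for k in range(max_points + 1)]
    pmf_b = [poisson_pmf(k, rate_b) for k in range(max_points + 1)]
    win = draw = loss = 0.0
    for i, pa in enumerate(pmf_a):
        for j, pb in enumerate(pmf_b):
            if i > j:
                win += pa * pb
            elif i == j:
                draw += pa * pb
            else:
                loss += pa * pb
    return win, draw, loss


win, draw, loss = outcome_probs(1.6, 1.1)  # hypothetical scoring rates
expected_margin = 1.6 - 1.1  # difference of the Poisson means
```

Dichotomizing at the end (“team A wins if `win > loss`”) is still possible, but the continuous score model keeps the margin and the draw probability available instead of throwing them away up front.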

I think this approach to modeling is good in theory but hard in practice. Better to start with some dumb discrete model that gets you an answer and demonstrates progress to your stakeholders. Then incrementally increase complexity, including adding more nuanced continuous variables, until doing so yields no further performance gains with respect to your objective function.

However, if you were trying to build a machine that thinks like a human, perhaps dichotomous thinking is the way to go. Perhaps dichotomous thinking is a cognitive heuristic we evolved to make decisions quickly and with minimal mental effort. Gelman is arguing against dichotomania in the context of doing science, not in everyday decisions like whether to take the stairs or go out the window in a house fire. Sander Greenland comments to this effect on Gelman’s blog post:

I do think dichotomizing or categorizing is a compulsion in the ordinary sense of the word, not just an acquired habit, in that it has an innate, natural quality. In everyday life it’s a heuristic in constant service of decisions, like asking someone “is it cold out?” to decide whether to grab a coat. The trouble is that, like so many useful natural heuristics and much of uneducated common sense, it can and does subvert the more refined goals of scientific research, in just the way Andrew and many others describe.

Deterministic thinking (“dichotomania”): a problem in how we think, not just in how we act — Statistical Modeling, Causal Inference, and Social Science

Thinking like a statistician (continuously) rather than like a civilian (discretely) — Statistical Modeling, Causal Inference, and Social Science

Greenland, Sander. "Invited commentary: the need for cognitive science in methodology." American Journal of Epidemiology 186.6 (2017): 639-645. (PDF link)

Related and very much worth reading:

Tong, Christopher. "Statistical Inference Enables Bad Science; Statistical Thinking Enables Good Science." The American Statistician 73.sup1 (2019): 246-261.

AI Long Tail

Pre-hype AI microtrends in industry.

US AI to punish employees who are not “engaged” on Slack

Elin.ai provides an AI Slack bot that “tracks engagement metrics” for conversations employees hold on Slack. I don’t like to talk smack about companies on the Internet, but this would be funny (employees would immediately game the system) if it weren’t so depressing.

AI makes creativity the new productivity

Artificial intelligence actually makes creativity more viable and efficient. Creative professionals we survey at Adobe say they spend about half their time on tasks that don’t require creative inspiration at all — searching for the right stock photo, carefully applying a mask to an object, or removing a car from video footage. Artificial intelligence can take over these mundane tasks, saving creative pros time for more engaging — and lucrative — truly creative endeavors.

Creativity Is the New Productivity — Marker

Data Sciencing for Fun and Profit

Emerging trends on the web that could be exploited for profit using quantitative techniques.

AI threatens the online poker industry

A few issues back I wrote about Pluribus, a Facebook poker bot that took an optimal strategy for two players and extended it to multiple players. This article argues that Pluribus could kill the billion-dollar online poker industry, which is why it is not being released. Still, enough details about Pluribus have been published for people to figure it out on their own…

Facebook’s new poker-playing AI could wreck the online poker industry—so it’s not being released — MIT Technology Review