The Machine Learning Trade-Off that Most People Miss

Computation vs. memory

Quick announcement. There is an interesting upcoming event for folks interested in MLOps. TWIMLcon: AI Platforms focuses on productionizing and scaling ML & AI. Use my code ROBERTNESS for discounted registration and join us in January!

The trade-off between memory and computation

We are used to thinking about machine learning trade-offs, such as variance vs. bias and precision vs. recall. Here is a third: computation vs. memory.

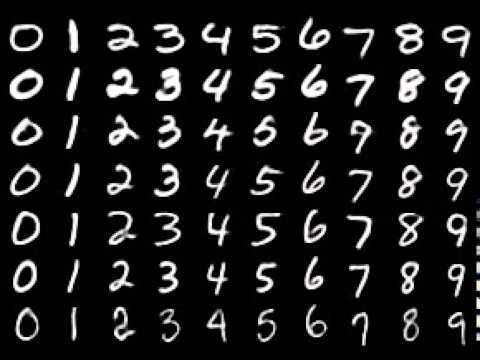

Suppose you are presented with the task of creating an algorithm that maps some input to some output. For example, the input could be images of hand-written digits, and the output could be the actual digits 0-9.

We might turn to machine learning to solve such a task.

Before we do, let's consider an even simpler case of mapping inputs to outputs, namely a mathematical function like sine. If you wanted to implement the sine function, you could write a program that computes the output, such as the algorithm used by that scientific calculator you used in high school.

Or you could write a lookup table that maps a set of floating-point numbers between 0 and 2π to their corresponding sine values. This program relies on storing a table of answers in memory rather than computing answers on the fly.
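A minimal sketch of the two extremes, assuming plain Python floats. `sine_by_computation` sums a truncated Taylor series after reducing the input to [-π, π]. Real calculators and math libraries often use other methods, such as CORDIC or tuned polynomial approximations. `sine_by_lookup` returns the stored value for the nearest of 1,024 evenly spaced inputs, with the table filled once, ahead of time, using `math.sin`. The function names and the table size are hypothetical.

```python
import math

# --- Computation extreme: nothing is stored, every call does the arithmetic. ---
def sine_by_computation(x: float, terms: int = 12) -> float:
    """Approximate sin(x) with a truncated Taylor series after range reduction."""
    x = math.remainder(x, 2 * math.pi)  # shift x into [-pi, pi] so the series converges fast
    term, total = x, 0.0                # first series term is x^1 / 1!
    for n in range(terms):
        total += term
        term *= -x * x / ((2 * n + 2) * (2 * n + 3))  # next term: multiply by -x^2 / ((2n+2)(2n+3))
    return total

# --- Memory extreme: answers are computed ahead of time, each call is a lookup. ---
TABLE_SIZE = 1024                   # hypothetical resolution: more entries, more memory, less error
STEP = 2 * math.pi / TABLE_SIZE     # spacing between stored inputs
SINE_TABLE = [math.sin(i * STEP) for i in range(TABLE_SIZE)]  # filled once, offline

def sine_by_lookup(x: float) -> float:
    """Return the stored output for the table input nearest to x."""
    x = x % (2 * math.pi)                 # wrap into [0, 2*pi)
    index = round(x / STEP) % TABLE_SIZE  # nearest entry; 2*pi wraps back to entry 0
    return SINE_TABLE[index]
```

Because |sin a − sin b| ≤ |a − b|, the table's worst-case error is at most half the spacing, π/1024 ≈ 0.003 here; roughly halving that error means doubling the table.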

Lookup tables and raw computation are two extremes of the memory-computation spectrum. Most programs fall somewhere between them. For example, we might construct a sine function that, when presented with an input it hasn't seen before, computes the output and stores it in memory. If the function receives the same input again, it looks up the stored output rather than repeating the computation.

When the problem is more complex and we want greater accuracy, we need more resources. But however many resources we need, spending them involves a trade-off between computation and memory.
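To make "more accuracy costs more resources" concrete for sine, this sketch measures worst-case error as each resource grows: the number of entries in a nearest-entry lookup table (memory) and the number of terms in a Taylor series on [-π, π] (computation). The helper names, sample count, and sizes are arbitrary illustrative choices.

```python
import math

def table_max_error(table_size: int, samples: int = 10_000) -> float:
    """Worst-case error of a nearest-entry sine table with `table_size` entries (memory cost)."""
    step = 2 * math.pi / table_size
    table = [math.sin(i * step) for i in range(table_size)]
    worst = 0.0
    for k in range(samples):
        x = 2 * math.pi * k / samples
        approx = table[round(x / step) % table_size]  # nearest stored input, wrapping at 2*pi
        worst = max(worst, abs(approx - math.sin(x)))
    return worst

def taylor_max_error(terms: int, samples: int = 10_000) -> float:
    """Worst-case error of a `terms`-term Taylor series on [-pi, pi] (computation cost)."""
    worst = 0.0
    for k in range(samples + 1):
        x = -math.pi + 2 * math.pi * k / samples
        term, total = x, 0.0
        for n in range(terms):
            total += term
            term *= -x * x / ((2 * n + 2) * (2 * n + 3))
        worst = max(worst, abs(total - math.sin(x)))
    return worst

for size in (16, 256, 4096):
    print(f"table entries={size:5d}  max error={table_max_error(size):.2e}")
for terms in (3, 6, 12):
    print(f"series terms={terms:2d}   max error={taylor_max_error(terms):.2e}")
```

In both cases the error falls only as the corresponding resource grows; which resource is cheaper to spend depends on the hardware and the budget.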

Machine learning algorithms make this trade-off as well. They learn by optimizing parameters on data during training, and those learned parameter values must be stored in memory. Generating an output for a given input means computing over that input and the stored parameters. In neural networks, the computation per input is roughly proportional to the number of parameters in the model.
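A sketch of the bookkeeping behind that last claim, assuming a plain fully connected network. The example architecture, 784 inputs (28×28 pixels), one hidden layer of 128 units, and 10 outputs, is a hypothetical digit classifier; `dense_network_costs` is an illustrative helper, not a library function.

```python
def dense_network_costs(layer_sizes: list[int]) -> tuple[int, int]:
    """Return (parameters stored in memory, multiply-accumulates per input) for a dense net."""
    params = 0
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        params += n_in * n_out + n_out  # weight matrix plus one bias per output unit
        macs += n_in * n_out            # every weight is multiplied by one input value
    return params, macs

params, macs = dense_network_costs([784, 128, 10])  # hypothetical digit classifier
print(f"{params:,} parameters ({params * 4:,} bytes as float32), {macs:,} MACs per image")
```

For this architecture that works out to 101,770 parameters and 101,632 multiply-accumulates per image, so computation tracks parameter count almost one-for-one. The proportionality is looser for layers that reuse weights, such as convolutions, where each weight is applied at many positions.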


Rethinking the mental model for machine learning

Among seasoned practitioners, a common mental model for a machine learning task like digit recognition goes as follows.

Some Platonic ideal of a rule-based program could take those input pixels, pass them through a bunch of logic, and return the right digit.

That ideal program is too difficult to implement.

So instead, we collect data and train a machine learning algorithm to approximate that program.

The implicit assumption is that if we could implement that Platonic program, we would. After all, if we had such a program, we wouldn't have to worry about pesky machine learning problems like collecting and curating data, overfitting, or maintaining code. Instead, we'd have a conventional program that we could do useful things with, such as formal verification. But, alas, we turn to machine learning because that Platonic program is out of reach.

Once we consider the computation-memory trade-off, we realize this mental model misses a key insight: even when we could implement that ideal rule-based program, we might still want to use machine learning if it gives us a more favorable position on the computation-memory trade-off than that program does.

For example, suppose that this ideal rule-based program is computationally expensive — perhaps too expensive for our cloud compute budget or for our hardware constraints. Examples could be physics simulations or high-speed algorithmic trading. In that case, we could rely on a machine learning algorithm that approximates that program. This machine learning algorithm uses training to amortize away much of that computation into parameter values stored in memory.
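A toy sketch of that amortization, under heavy assumptions: a step-by-step falling-object simulation stands in for the expensive rule-based program, and a least-squares fit of distance ≈ a·t² + b stands in for the machine learning model. The names `simulate_fall` and `surrogate_fall`, the time step, and the training grid are all hypothetical choices, and the simulation is only "expensive" relative to the two-parameter model.

```python
G = 9.81    # gravitational acceleration, m/s^2
DT = 1e-3   # simulation time step, s (hypothetical choice)

def simulate_fall(t: float) -> float:
    """Stand-in 'rule-based' program: simulate distance fallen from rest, one small step at a time."""
    velocity, distance = 0.0, 0.0
    for _ in range(round(t / DT)):
        velocity += G * DT
        distance += velocity * DT
    return distance

# Training: spend computation up front by running the simulator on sample inputs (0 to 2 s).
train_t = [0.04 * i for i in range(51)]
train_y = [simulate_fall(t) for t in train_t]

# Fit distance ~ a * t^2 + b by ordinary least squares on the single feature t^2.
xs = [t * t for t in train_t]
mean_x = sum(xs) / len(xs)
mean_y = sum(train_y) / len(train_y)
a = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, train_y))
     / sum((x - mean_x) ** 2 for x in xs))
b = mean_y - a * mean_x

def surrogate_fall(t: float) -> float:
    """Learned approximation: two stored parameters and a few arithmetic operations per query."""
    return a * t * t + b
```

After training, a query at t = 2 s costs a handful of floating-point operations instead of 2,000 simulation steps; that computation now lives in the stored values of `a` and `b`.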

Thus, even if we could implement the ideal program that solves the problem, we still might want to use machine learning because it is a better fit for our resource constraints.

Go Deeper

Holden, Daniel, et al. "Subspace neural physics: Fast data-driven interactive simulation." Proceedings of the 18th annual ACM SIGGRAPH/Eurographics Symposium on Computer Animation. 2019.