You should join this superforecasting competion

plus game theorying for fun and profit, and the new business of synthesizing data

AltDeep is a newsletter focused on microtrend-spotting in data and decision science, machine learning, and AI. It is authored by Robert Osazuwa Ness, a Ph.D. machine learning engineer at an AI startup and adjunct professor at Northeastern University.

Overview:

Negative Examples: More Venn diagrams

Dose of Philosophy: Or, A Primer in Sounding Not Stupid at Dinner Parties: New business trend: Venn diagrams

Ear to the Ground: The AI chip market, how to run an AI startup, and the Lottery Ticket Hypothesis

AI For the Rest of Us: Synthesizing data is now a business trend

Data-Sciencing for Fun & Profit: Game theorying for fun and profit: The Weird ETH Auction

The Essential Read: You should join this superforecasting competition

Negative Examples

Trending items worth knowing about but not really worth reading about.

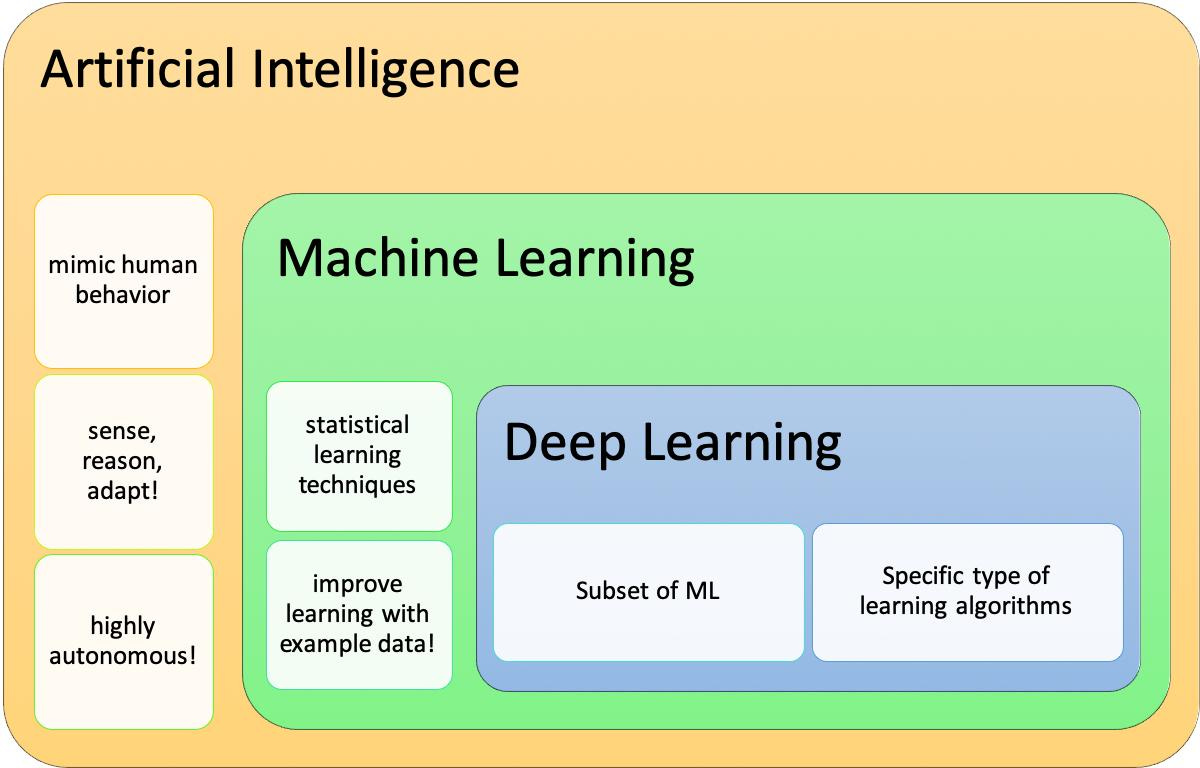

This week featured lots of yelling and Venn diagrams from people who honorable devote their time to policing vocabulary and making Venn diagrams.

Dose of Philosophy: Or, A Primer in Sounding Not Stupid at Dinner Parties

Russell's Theory of Descriptions

Imagine you are trying to write an NLP algorithm that reads newspaper articles and records the information into some knowledgebase. The challenge of the algorithm is to assign meanings to phrases. At first, you write the algorithm such that the meaning of a definite description, for example, "the current President of the US," is equivalent to the object the phrase refers to, in this case, Trump.

So what then do you do when you hit the sentence "The current King of England"? Currently, there is no King of England, so this sentence refers to nothing. Your algorithm would call this sentence meaningless, even though to the human reader, it is not meaningless.

Bertrand Russell's solution is to translate the sentence containing this phrase into a basic logical form. For example, translate the "The present king of England is bald" into "There is one and only one current king of England, and he is bald." Russell argues that all such sentences have an equivalent logical representation. The translation solves the problem because it is meaningful to a human and straightforward for an algorithm to evaluate logically. In this example, this union of two predicates evaluates to "false."

Ear to the Ground

Report forecasts AI chip market will grow to over $80 billion by 2027.

Really good advice for machine learning startups — What I’ve Learned Working with 12 Machine Learning Startups by Daniel Shenfeld

Really interesting blog post and paper from Microsoft researchers who propose a Bayesian method for balancing exploration and exploitation in recommendation systems. They are also entirely serious when they give this cryptic Orwellian example:

This tension, between exploration, exploitation and users’ incentives, provides an interesting opportunity for an entity – say, a social planner – who might manipulate what information is seen by users for the sake of a common good, balancing exploration of insufficiently known alternatives with the exploitation of the information amassed.Uber published a paper describing their implementation of the Lottery Ticket Hypothesis in the training of neural networks. Frankle and Carbin’s Lottery Ticket Hypothesis is that dense, randomly-initialized, feed-forward networks contain subnetworks that - when trained in isolation - reach test accuracy comparable to the original network in a similar number of iterations.

AI for the Rest of Us

New business trend: synthetic data

I am seeing a business trend centering on ML-generated synthetic data. Some prominent examples are the generation of deep fakes (which does have some non-evil applications), style-transfer, text generation, automatic summarization, and caption generation.

This is a surprising phenomenon given the two main opposing worldviews about data scarcity:

Too much data. There are too much data, and its all I can do to stay on top of all the tools and cloud services to manage the deluge. Common amongst people with computer science backgrounds.

Not enough good data. Most of this so-called big data is bias or just not relevant, and if you do find a good set of data, by the time you finish munging it you find you do not have enough to answer the interesting questions.

Either viewpoint looks at synthetic data skepticism; camp 1 asks “why do we need even more data?”, while camp 2 asks, “what can you learn from regurgitated data?”

However, the investors and the market are providing validation to the data synthesis use case. When you think about it, it is not surprising that data synthesis focuses on media (images, video, text), which are hard things to generate in machine learning, and since creating media is the primary goal of several large industries.

Data Sciencing for Fun and Profit

Game theorying for fun and profit: The Weird ETH Auction

The programmable cryptocurrency ETH is enabling a new crop of monetary “apps.” I came across an ETH auction that provides an interesting take on a dollar auction.

In this auction, the highest bidder gets a 1.0 ETH cash prize (currently $230.5 at the time of writing). The minimum bid to participate is 0.001 ETH ($.23 at the time of writing). A bidder places a bid by paying out the bid amount.

Every time a bidder places a bid, the auction is extended 6 hours, giving time to other bidders to counterbid.

When you place a bid, you are the highest bidder for some amount of time. As soon as two other bidders outbid you, you are refunded the amount you paid out for your bid.

As mentioned above, the highest bidder when the auction closes gets the cash prize, and all bidders who are not the highest or second highest bidders get their bid amounts refunded. However, if you are the second highest bidder when the auction closes, then you lose the amount you bid.

So far, this description matches the traditional form of the dollar auction. In its original conception, once the bids exceed the prize amount, the top two bidders have an incentive to enter into a kind of war of attrition, where they continue to place bids to avoid being the second highest bidder.

This auction has an added twist. At any time, anyone could increase the prize by paying in a minimum of 0.1 ETH. The last person to increase the prize at the end of the auction wins the sum of the highest and second highest bids.

Dollar auctions are a game where the house (specifically the auctioneer) always wins, by way of fees. Is this is a scam? Are casinos scams?

Dollar Auction — Wikipedia

The Essential Read

You should join this superforecasting competition

If you read data science popular non-fiction, you might have come across Superforecasting: The Art and Science of Prediction by Wharton professor Philip E. Tetlock and Dan Gardner. If you haven't, I recommend it.

Tetlock's research identified that while most of the geopolitical forecasts of purported experts don't do much better than chance, the are some so-called "superforecasters" who consistently make quality predictions.

Now the US intelligence research agency IARPA has launched a new superforecasting contest targeting geopolitical events such as elections, disease outbreaks, and economic indicators. The winner gets $250,000 in prize money.

Contestants get access to information on winning methodologies from the previous competition and can use computers.

You should join, even if you do not fancy yourself an expert in geopolitics. The previous IARPA-ran forecasting contest was from 2011 to 2015, which Tetlock's team won. His team championed non-experts, who turned out, did pretty well in niche categories despite lacking subject matter expertise.

Another reason to join is that you can win with solid cognitive reasoning, as opposed to brute-forcing it with statistical prediction algorithms. If the goal were to predict the outcome of a stock price, you are probably better off not over-thinking it, and instead taking historical price data and fitting an LSTM and spending your time backtesting, optimizing parameters, and adding constraints to your algorithm that add robustness to its predictions.

However, one cannot bypass human reasoning when it comes to predicting the outcome of US-China Trade Talks or the number of nukes North Korea is going to test in the next year. Firstly, the Augean task of finding, selecting, and munging relevant data needed to produce a machine learning-based prediction that answers this sort of question would itself be a substantial cognitive effort.

According to Tetlock's research, the ideal process for answering this kind of question is more akin to the process of answering a job interview question one might get at McKinsey & Company or a tech company. You answer these questions by breaking down the overall event into a set of smaller, more cognitively digestible events, and then stringing them together into a plausible narrative. You evaluate the probabilities of outcomes of the overall event by evaluating the probability of each sub-event.

Prediction algorithms can make this reasoning process more powerful; for example, you can develop those probabilistic narratives into a Monte Carlo simulation. However, they can't replace this reasoning process (short of having strong AI).

So the time constraint should be manageable. If you lack for time, you should be able to come up with a good prediction in the time it takes to reason through a McKinsey interview question, say, over your lunch break?